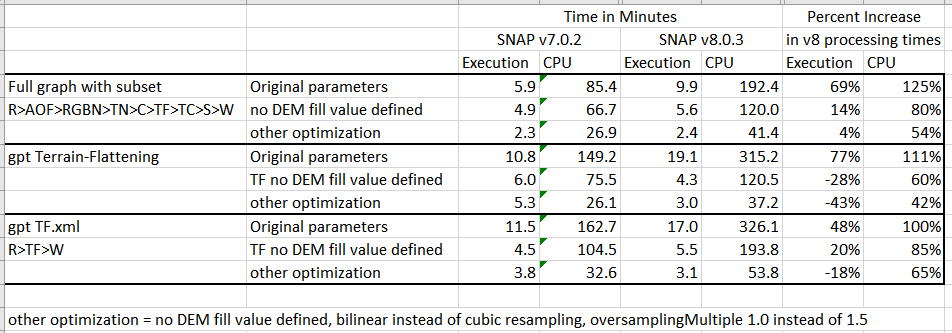

I was not able to identify any specific format, set of parameters, or config file changes that affect the relative performance difference between v7 and v8 for Sentinel-1 geocoding (the first graph below). Performance of the individual modules tested was roughly the same in v7 and v8, with the exception of Terrain-Flattening. When Terrain-Flattening is included, v8 uses roughly twice the CPU time of v7.

The “other optimization” parameters should provide an acceptable compromise for migrating production to v8, giving roughly 2x the production rate of v7 with the original parameters.

This seems like a bug: adding externalDEMNoDataValue more than doubles the execution time and CPU time of both gpt Terrain-Flattening and gpt TF.xml. Leaving it out could cause problems in areas near sea level, since the no-data value then defaults to 0.

gpt Terrain-Flattening beats CPU utilization of gpt TF.xml by a fair amount. They should be nearly identical since both read and write BEAM-DIMAP files. Is this an example of graph overhead?

The full graph with subset refers to:

Writing an intermediate product after Calibration, and then running:

Terrain-Flattening only from gpt command line: gpt Terrain-Flattening -Ssource=.......

and a Terrain-Flattening only gpt script, below, from command line: gpt TF.xml
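For reference, a Terrain-Flattening-only graph of this kind might look roughly like the sketch below. The file names calibrated.dim and tf_output.dim are placeholders, not the actual files used in these tests, and the parameter block shown is the “original parameters” set listed further down.

```xml
<graph id="Graph">
  <version>1.0</version>
  <!-- Read the intermediate product written after Calibration.
       calibrated.dim is a placeholder name (assumption). -->
  <node id="Read">
    <operator>Read</operator>
    <sources/>
    <parameters class="com.bc.ceres.binding.dom.XppDomElement">
      <file>calibrated.dim</file>
    </parameters>
  </node>
  <!-- Terrain-Flattening with the "original parameters" set from this post. -->
  <node id="Terrain-Flattening">
    <operator>Terrain-Flattening</operator>
    <sources>
      <sourceProduct refid="Read"/>
    </sources>
    <parameters class="com.bc.ceres.binding.dom.XppDomElement">
      <demName>External DEM</demName>
      <demResamplingMethod>BICUBIC_INTERPOLATION</demResamplingMethod>
      <externalDEMFile>n42w108_dem.tif</externalDEMFile>
      <externalDEMNoDataValue>-32768.0</externalDEMNoDataValue>
      <externalDEMApplyEGM>true</externalDEMApplyEGM>
      <outputSimulatedImage>false</outputSimulatedImage>
      <additionalOverlap>0.0</additionalOverlap>
      <oversamplingMultiple>1.5</oversamplingMultiple>
    </parameters>
  </node>
  <!-- Write the result as BEAM-DIMAP, matching the read format.
       tf_output.dim is a placeholder name (assumption). -->
  <node id="Write">
    <operator>Write</operator>
    <sources>
      <sourceProduct refid="Terrain-Flattening"/>
    </sources>
    <parameters class="com.bc.ceres.binding.dom.XppDomElement">
      <file>tf_output.dim</file>
      <formatName>BEAM-DIMAP</formatName>
    </parameters>
  </node>
</graph>
```

Swapping the parameter block for one of the other sets described here gives the corresponding test case without changing the Read and Write nodes.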

Original parameters uses these TF parameters:

<demName>External DEM</demName>

<demResamplingMethod>BICUBIC_INTERPOLATION</demResamplingMethod>

<externalDEMFile>n42w108_dem.tif</externalDEMFile>

<externalDEMNoDataValue>-32768.0</externalDEMNoDataValue>

<externalDEMApplyEGM>true</externalDEMApplyEGM>

<outputSimulatedImage>false</outputSimulatedImage>

<additionalOverlap>0.0</additionalOverlap>

<oversamplingMultiple>1.5</oversamplingMultiple>

TF no DEM fill value uses the same parameters as above, but without externalDEMNoDataValue defined.

Other optimization uses these TF parameters:

<demName>External DEM</demName>

<demResamplingMethod>BILINEAR_INTERPOLATION</demResamplingMethod>

<externalDEMFile>n42w108_dem.tif</externalDEMFile>

<externalDEMApplyEGM>true</externalDEMApplyEGM>

<outputSimulatedImage>false</outputSimulatedImage>

<additionalOverlap>0.0</additionalOverlap>

<oversamplingMultiple>1.0</oversamplingMultiple>