Dear all, I was wondering whether this issue could be resolved. Importing the same vector shapefile from the GUI and from the GPT command line gives different results: in the GUI all the vectors are imported, while with GPT only the first 50 polygons are loaded. This seems really strange.

Did you test whether the vector file contains (partially) invalid geometries? It could be that GPT filters these out (or does not recognize them), while the SNAP GUI loads them fully. This is just a guess and does not fully answer your question, but it could help narrow down why the results differ.
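A quick way to test for one common kind of invalid geometry is to check each polygon ring for self-intersections. The sketch below is a naive O(n²) stand-in for proper validity tools (for example QGIS "Check Validity" or shapely's `is_valid`). It assumes a ring is given as a list of `(x, y)` tuples and only detects crossings between non-adjacent edges, so it will not catch spikes, overlapping adjacent edges or other invalidity types.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _orientation(p: Point, q: Point, r: Point) -> int:
    """0 if p, q, r are collinear, 1 if clockwise, -1 if counter-clockwise."""
    val = (q[1] - p[1]) * (r[0] - q[0]) - (q[0] - p[0]) * (r[1] - q[1])
    if val == 0:
        return 0
    return 1 if val > 0 else -1


def _on_segment(p: Point, q: Point, r: Point) -> bool:
    """True if q lies within the bounding box of segment pr (p, q, r collinear)."""
    return (min(p[0], r[0]) <= q[0] <= max(p[0], r[0])
            and min(p[1], r[1]) <= q[1] <= max(p[1], r[1]))


def segments_intersect(p1: Point, p2: Point, p3: Point, p4: Point) -> bool:
    """Classic orientation test for segments p1-p2 and p3-p4."""
    o1 = _orientation(p1, p2, p3)
    o2 = _orientation(p1, p2, p4)
    o3 = _orientation(p3, p4, p1)
    o4 = _orientation(p3, p4, p2)
    if o1 != o2 and o3 != o4:
        return True
    # collinear special cases
    return ((o1 == 0 and _on_segment(p1, p3, p2))
            or (o2 == 0 and _on_segment(p1, p4, p2))
            or (o3 == 0 and _on_segment(p3, p1, p4))
            or (o4 == 0 and _on_segment(p3, p2, p4)))


def ring_is_simple(ring: List[Point]) -> bool:
    """Naive check that a polygon ring does not cross itself."""
    pts = list(ring)
    if len(pts) > 1 and pts[0] == pts[-1]:
        pts = pts[:-1]  # drop the closing vertex; edges are closed below
    n = len(pts)
    if n < 3:
        return False
    edges = [(pts[i], pts[(i + 1) % n]) for i in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # adjacent edges always share a vertex, so skip them
            if j == i + 1 or (i == 0 and j == n - 1):
                continue
            if segments_intersect(*edges[i], *edges[j]):
                return False
    return True
```

For example, a unit square passes, while a "bow-tie" ring such as `[(0, 0), (1, 1), (1, 0), (0, 1)]` is reported as not simple.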

Dear ABraun, the shapefile is a modified version of OSM data, so it should be OK. Here it is (https://zenodo.org/record/6992586#.YwiuES8QPm0). My impression is that only the first 50 polygons are loaded with GPT.

That is a lot of single features (n=71520).

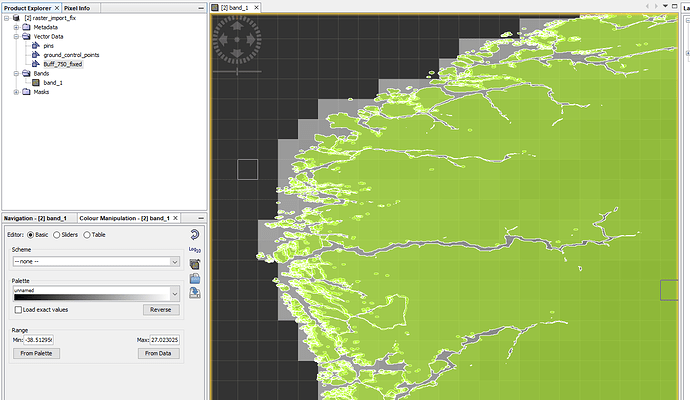
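To compare the number of features in the file with the number SNAP actually imports, the record count can be read directly from the `.shx` index without any GIS library. This sketch follows the ESRI shapefile layout as I understand it: a 100-byte header with file code 9994 (big-endian) at byte 0, the file length in 16-bit words (big-endian) at byte 24, and one 8-byte index record per feature. The file name in the usage line is hypothetical.

```python
import struct

SHX_HEADER_SIZE = 100   # bytes
SHX_RECORD_SIZE = 8     # bytes per index entry (offset + content length)
SHAPEFILE_FILE_CODE = 9994


def count_records_from_shx_header(header: bytes) -> int:
    """Number of features, derived from the length field of a .shx header."""
    if len(header) < SHX_HEADER_SIZE:
        raise ValueError("header too short")
    (file_code,) = struct.unpack(">i", header[0:4])
    if file_code != SHAPEFILE_FILE_CODE:
        raise ValueError("not a shapefile index (unexpected file code)")
    (length_words,) = struct.unpack(">i", header[24:28])
    length_bytes = length_words * 2  # length is stored in 16-bit words
    return (length_bytes - SHX_HEADER_SIZE) // SHX_RECORD_SIZE


def count_records(shx_path: str) -> int:
    """Open a .shx file and return its feature count."""
    with open(shx_path, "rb") as f:
        return count_records_from_shx_header(f.read(SHX_HEADER_SIZE))
```

Usage: `count_records("osm_polygons.shx")` should return the same number as the attribute table shown above; comparing it with the number of shapes listed under Vector Data in the GPT output makes the "only 50 polygons" observation measurable.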

Unless you need them explicitly, have you considered disabling “Separate Shapes” in the GPT import?

It worked for me, at least.

Otherwise, running a Dissolve before importing could also help to get all geometries into SNAP via GPT.
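For reference, a GPT call with “Separate Shapes” disabled could be assembled as below. This is a sketch that assumes the operator alias `Import-Vector` with the parameters `vectorFile` and `separateShapes` (check with `gpt Import-Vector -h` on your installation), and passes the source product positionally; all file names are hypothetical.

```python
import shlex
import subprocess
from typing import List


def build_import_vector_cmd(source: str, vector_file: str, target: str,
                            separate_shapes: bool = False,
                            gpt: str = "gpt") -> List[str]:
    """Assemble a gpt Import-Vector call (operator/parameter names assumed)."""
    return [
        gpt, "Import-Vector",
        f"-PvectorFile={vector_file}",
        f"-PseparateShapes={'true' if separate_shapes else 'false'}",
        "-t", target,   # target product path
        source,         # source product, given positionally
    ]


def run_gpt(cmd: List[str]) -> None:
    """Run gpt and raise CalledProcessError if it exits with an error."""
    subprocess.run(cmd, check=True)


cmd = build_import_vector_cmd("subset.dim", "osm_polygons.shp", "with_vectors.dim")
print(shlex.join(cmd))  # inspect the command before calling run_gpt(cmd)
```

Building the argument list in one place makes it easy to run the same import with `separate_shapes=True` and `False` and compare how many shapes end up in each target product.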

Thank you, ABraun, for your valuable suggestions. I already have the “Separate Shapes” option disabled, and that did not solve the issue. I agree with you, but sometimes it works and sometimes it does not. I think it depends on the extent of the polygons.

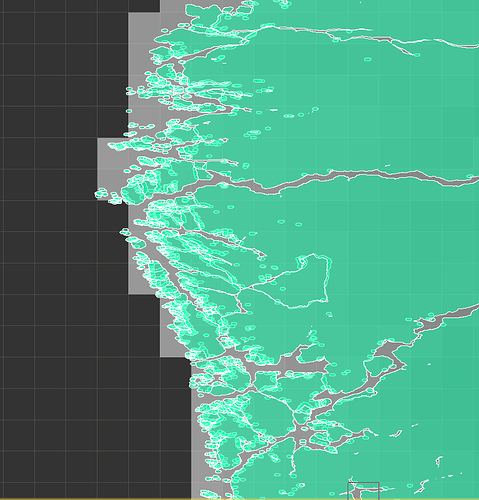

I have noticed this strange behavior, which differs from the GUI operation, where I was also asked to pick the CRS. I don’t understand what you mean by “Dissolve”, but I tried merging all the polygons with geopandas and obtained a single MULTIPOLYGON geometry. However, in that case the GPT graph does not recognize the shapefile as lying within the product extent, whereas the GUI places the shapefile perfectly on the product.
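One thing worth ruling out here is a CRS or extent mismatch. If the GUI asks for a CRS, that may be a hint (my assumption, not confirmed) that it is not taking the CRS from the file, so it is worth checking whether a `.prj` sidecar is present and whether the shapefile's bounding box overlaps the product at all. The sketch reads the bounding box from the `.shp` header (four little-endian doubles Xmin, Ymin, Xmax, Ymax at bytes 36-67, per the ESRI shapefile layout). The product extent has to be supplied by you in the same CRS; nothing here reads it from SNAP.

```python
import struct
from pathlib import Path
from typing import Tuple

BBox = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def shp_bbox_from_header(header: bytes) -> BBox:
    """Bounding box stored in a .shp main-file header."""
    if len(header) < 100:
        raise ValueError("header too short")
    xmin, ymin, xmax, ymax = struct.unpack("<4d", header[36:68])
    return (xmin, ymin, xmax, ymax)


def read_shp_bbox(shp_path: str) -> BBox:
    with open(shp_path, "rb") as f:
        return shp_bbox_from_header(f.read(100))


def has_prj(shp_path: str) -> bool:
    """True if the .prj sidecar (CRS definition) sits next to the .shp."""
    return Path(shp_path).with_suffix(".prj").exists()


def bboxes_overlap(a: BBox, b: BBox) -> bool:
    """Axis-aligned overlap test; both boxes must be in the same CRS."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]
```

If `has_prj` is false, or the box only overlaps the product after reprojection, that would be consistent with the GUI working (CRS chosen by hand) while GPT places the geometries outside the product extent.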

Please find here two versions of the shapefile: one with the invalid geometries fixed, the other dissolved in QGIS (one multipolygon instead of thousands of single ones).

It would be interesting to see whether this makes any difference for the GPT import, or whether it is just a (random) memory issue in the end.

I will give it a try and let you know as soon as I can. Thanks for the help, ABraun.

OK, neither of the two worked for me. The error I get is “Unable to parse geometry”, and only 50 polygons are loaded.

Any other suggestion?

In the distant past, I encountered shapefiles too complex for the capabilities of the computer system I was using. There are robust algorithms to simplify complex polygons. Today, any GIS package should offer tools to validate and simplify shapefile polygons. In some cases, shapefile processing may fail with certain projections and not others.
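The best-known of these simplification algorithms is Douglas-Peucker: keep the endpoints, find the vertex farthest from the line between them, and recurse on both halves only if that distance exceeds a tolerance. The sketch below works on a single line or ring given as `(x, y)` tuples, with the tolerance in the data's map units. Note that plain Douglas-Peucker can produce self-intersecting rings; GIS tools usually offer a topology-preserving variant (e.g. shapely's `simplify(tolerance, preserve_topology=True)`), which is safer for polygons.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _perpendicular_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from p to the line through a and b (or to a if a == b)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    if dx == 0 and dy == 0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    return abs(dy * p[0] - dx * p[1] + b[0] * a[1] - b[1] * a[0]) / math.hypot(dx, dy)


def douglas_peucker(points: List[Point], tolerance: float) -> List[Point]:
    """Recursively drop vertices closer than `tolerance` to the simplified line."""
    if len(points) < 3:
        return list(points)
    # find the interior vertex farthest from the segment joining the endpoints
    max_dist, index = 0.0, 0
    for i in range(1, len(points) - 1):
        d = _perpendicular_distance(points[i], points[0], points[-1])
        if d > max_dist:
            max_dist, index = d, i
    if max_dist > tolerance:
        left = douglas_peucker(points[: index + 1], tolerance)
        right = douglas_peucker(points[index:], tolerance)
        return left[:-1] + right  # the split vertex appears in both halves
    return [points[0], points[-1]]
```

For instance, `douglas_peucker([(0, 0), (1, 0.05), (2, -0.05), (3, 0)], 0.1)` collapses the small wiggle to `[(0, 0), (3, 0)]`, while a vertex that deviates by more than the tolerance is kept.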

You should check the memory settings for GPT on your system. You may need to shut SNAP down to release memory before using GPT. Using OS tools to monitor processor load and memory usage while running GPT should tell you if there is a resource limit.
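The maximum Java heap available to GPT is set by an `-Xmx` line in its vmoptions file; on a typical installation this is `bin/gpt.vmoptions` in the SNAP install directory (an assumption, the location may differ between versions and platforms). The sketch below parses that value so it can be compared with the physical RAM of the machine. gpt also accepts a `-c` option for the tile cache size, which is worth reviewing at the same time.

```python
import re
from typing import Optional

_XMX = re.compile(r"^-Xmx(\d+)([kKmMgGtT]?)$")
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}


def max_heap_bytes(vmoptions_text: str) -> Optional[int]:
    """Return the -Xmx limit in bytes, or None if no -Xmx line is present.

    Commented lines (starting with '#') never match. If several -Xmx lines
    exist, this sketch reports the last one.
    """
    result = None
    for line in vmoptions_text.splitlines():
        m = _XMX.match(line.strip())
        if m:
            result = int(m.group(1)) * _UNITS[m.group(2).lower()]
    return result


def read_max_heap(path: str) -> Optional[int]:
    with open(path, encoding="utf-8") as f:
        return max_heap_bytes(f.read())
```

On a 16 GB workstation, a value printed by `read_max_heap(".../bin/gpt.vmoptions")` that is far below or close to the full RAM would be a first hint for where a resource limit might come from.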

I don’t get this error, neither with the original data nor with the one fixed in QGIS.

So it seems to be related to your machine, rather than to SNAP or the data in the first place.

Do you use the latest version of SNAP?

Yes, I am using SNAP 9. I wonder whether it depends on the memory of my workstation; I have 16 GB of RAM. In any case, this issue does not appear in the GUI (File > Import > ESRI Shapefile), only with GPT. Really strange to me.