Hello,

I have been performing offset tracking, and the result produced after DEM coregistration shows blank areas in some parts. I have tried different DEMs, including an external DEM, but the gaps remain. Can anyone please help me get a good result? I have attached my file below.

Maybe those areas have no point match, or the velocity there is too high.

You can change the window size or increase the maximum velocity.
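As a rough sanity check when choosing these settings, it can help to convert a maximum velocity into an expected displacement in pixels between the two acquisitions. The sketch below is plain arithmetic, not SNAP's internal logic; the function name and the example values (5 m/day, 12 days, 10 m pixel spacing) are assumptions chosen for illustration.

```python
def displacement_in_pixels(max_velocity_m_per_day, days_between, pixel_spacing_m):
    """Largest displacement, in pixels, implied by a maximum-velocity setting."""
    # Distance travelled between the two acquisitions, in metres.
    displacement_m = max_velocity_m_per_day * days_between
    # Express that distance in image pixels.
    return displacement_m / pixel_spacing_m


# Hypothetical example values: 5 m/day, 12-day pair, 10 m pixel spacing.
print(displacement_in_pixels(5.0, 12, 10.0))
```

If the real motion between two acquisitions exceeds the displacement implied by the maximum-velocity setting, no match can be expected in that area, which is one possible cause of blank regions.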

OK, thank you sir. I shall try and check the results.

The quality of the results is very variable: for some pairs it works, for others it does not.

Also, be sure to use only images separated by 12 days.

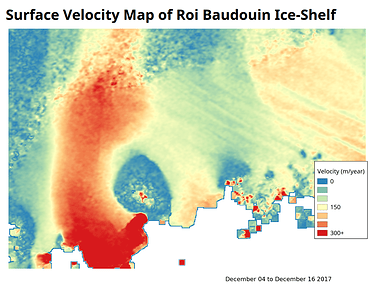

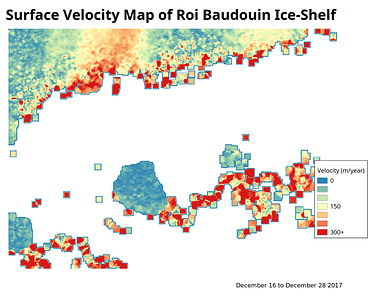

Here’s an example with two successive pairs that gave me totally different results.

What I usually do is collect all the images and then perform offset tracking in a chain process.

Here’s my graph : myGraph.xml (3.7 KB) (don’t forget to modify the DEM-Assisted-Coregistration where I use my own DEM)

And here’s the shell file I use to launch the process : offsetTracking.sh (1.4 KB) (likewise, don’t forget to replace the names with your own)

Thank you so much for the reply.

Can I perform the chain process directly using tools>batch processing?

How can I use the XML and shell files in SNAP? I am sorry, it might sound silly, but I am new to SNAP and would like to know whether I can do coding in SNAP.

Two ways:

- Using SNAP:

  In the graph builder, you have a load button so you can import the .xml file. Then you can easily modify every tab to fit your needs.

  Problem: you have to launch it for every pair. If you use batch processing, the first image will be selected as the reference, so you will compute offset tracking over 12 days, 24 days, 36 days and so forth. That’s not what you want, because you’ll have more and more gaps as the temporal baseline increases.

- Using the Graph Processing Tool (gpt):

  SNAP has a very friendly user interface with buttons, visualization and so on.

  However, you can do “everything” with commands, which is very useful for automating the process. What I tend to do now is write a set of operations in a shell file (if you use Linux) that I launch in a terminal.

If you’re interested, I suggest you read some material here:

https://sa.catapult.org.uk/wp-content/uploads/2016/05/SNAP-Sentinel-1_TrainingCourse_Exercise4.pdf

and

https://senbox.atlassian.net/wiki/spaces/SNAP/pages/70503590/Creating+a+GPF+Graph

and

https://senbox.atlassian.net/wiki/spaces/SNAP/pages/70503475/Bulk+Processing+with+GPT
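The pairing problem described above (batch processing keeps the first image as the reference, so the temporal baseline keeps growing) can be illustrated with a minimal Python sketch. It only builds the list of pairs; it does not call SNAP or gpt. The scene names and dates are hypothetical placeholders, and the function names are invented for this example.

```python
from datetime import date


def first_as_reference_pairs(scenes):
    """Pair every later scene with the first one (what batch processing does)."""
    ordered = sorted(scenes, key=lambda scene: scene[1])
    first_name, first_date = ordered[0]
    return [(first_name, name, (day - first_date).days) for name, day in ordered[1:]]


def chained_pairs(scenes, gap_days=12):
    """Pair each scene with the next one, keeping only pairs `gap_days` apart."""
    ordered = sorted(scenes, key=lambda scene: scene[1])
    pairs = []
    for (ref_name, ref_day), (sec_name, sec_day) in zip(ordered, ordered[1:]):
        gap = (sec_day - ref_day).days
        if gap == gap_days:
            pairs.append((ref_name, sec_name, gap))
    return pairs


# Hypothetical scene list: (file name, acquisition date).
scenes = [
    ("scene_1.zip", date(2019, 1, 1)),
    ("scene_2.zip", date(2019, 1, 13)),
    ("scene_3.zip", date(2019, 1, 25)),
    ("scene_4.zip", date(2019, 2, 6)),
]

for pair in first_as_reference_pairs(scenes):
    print("first as reference:", pair)
for pair in chained_pairs(scenes):
    print("chained:", pair)
```

With the first image as reference the baselines grow to 12, 24 and 36 days, whereas the chained pairing keeps every pair at 12 days. Each chained pair could then be passed to a gpt call from a shell or Python script.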

Thank you so much sir. I shall go through the materials and then process accordingly.

Hello Sir,

I have 3 questions:

1-Could you please tell me how to show velocity vectors in the velocity map?

2-Does the offset tracking function in SNAP work well with other types of land deformation (landslides, earthquakes...)?

3-Have you ever performed offset tracking using SNAP on other data sources like ALOS-2 L1.1 SLC data, or L1.5 Range Detected data? It would be really helpful if you could offer a processing chain for this.

Thanks in advance!

- Your answer is on page 15 of the official tutorial: S1TBX Offset Tracking Tutorial.pdf (1.6 MB)

- Offset tracking is mostly used in glaciology because of very rapid flow (>100 meters per year). It means that if you locally shift a pixel of the slave image, you can find the corresponding pixel in the master image. On land, subsidence is far too slow to be seen by local cross-correlation of images.

  With bigger movements like landslides, the surface may be too deteriorated to find any correspondence between the two images.

  So I would say no. If anyone has a counter-example, I’m very interested.

- On our premises, we developed a SAR/InSAR suite that can do that. However, due to intellectual property issues, I cannot offer you what you ask.

Thanks for your reply.

Now I have another question. When we do offset tracking, should Range Detected data or SLC data be used? S-1 GRD data is 1:5 multilooked, and the spatial resolution is 10 m. ALOS-2 L1.5 data is 1:2 multilooked with 6.25 m spatial resolution.

We know that the offset tracking result is sensitive to spatial resolution, and SLC data has higher resolution in both range and azimuth.

So what I want to figure out is: should we use the non-multilooked SLC data, or the processed L1.5 data (GRD for S-1)? I ask because in SNAP we can convert COMPLEX data to DETECTED data.

Thanks!

SNAP cannot use SLC data for offset tracking, only GRD data. That’s a problem for two reasons:

- As you mentioned, the technique is resolution dependent. Dealing with already multilooked images is bad because you won’t be able to obtain fine results.

- The multilooking and speckle filtering used to produce GRD data are also unfortunate, because in SAR the deterministic noise due to speckle is actually a relevant pattern that we want to exploit in tracking methods (that’s why it is called speckle tracking). Strictly speaking, SNAP does not perform speckle tracking.

CORRECTION : GRD data available on the Copernicus sci-hub has not been speckle filtered (though the SLC2GRD pre-defined graph contains a speckle-filter module). However, the multilooking still destroys an important part of the speckle pattern.

To be honest, I don’t really understand the reason. Offset Tracking is “simply” a local cross correlation of images. The method would be so easily implemented to deal with both GRD and SLC (a priori debursted). I would really appreciate any clarification. Maybe @marpet ?

However, as you say, it is possible with the SRGR module to create your “own” GRD data, so you can avoid speckle filtering/multilooking. Nevertheless, the projection from slant range to ground range still involves an interpolation process, which can degrade some patterns. The ideal process would be to do the speckle tracking first and then project the results into ground range.
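To make the "local cross correlation" idea concrete, here is a minimal NumPy sketch that recovers the shift between two image patches from the peak of their cross-correlation. It is only an illustration of the principle on synthetic data, not SNAP's implementation: the function name is invented, the correlation is circular (computed via FFT), and only integer shifts are estimated.

```python
import numpy as np


def estimate_offset(reference, secondary):
    """Estimate the integer (row, col) shift that maps `reference` onto `secondary`."""
    # Remove the mean so the correlation peak reflects texture, not brightness.
    ref = reference - reference.mean()
    sec = secondary - secondary.mean()

    # Circular cross-correlation computed in the Fourier domain.
    spectrum = np.fft.fft2(sec) * np.conj(np.fft.fft2(ref))
    correlation = np.fft.ifft2(spectrum).real

    # The peak position gives the shift, modulo the patch size.
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)

    # Convert wrapped indices into signed shifts.
    return tuple(
        int(p) if p <= n // 2 else int(p) - n
        for p, n in zip(peak, correlation.shape)
    )


# Synthetic patch with a random, speckle-like texture, shifted by a known amount.
rng = np.random.default_rng(0)
reference_patch = rng.random((64, 64))
secondary_patch = np.roll(reference_patch, shift=(3, -2), axis=(0, 1))

print(estimate_offset(reference_patch, secondary_patch))
```

On this synthetic pair the estimate should match the applied shift of 3 rows and -2 columns. Multiplying such a pixel offset by the pixel spacing and dividing by the number of days between acquisitions gives a velocity estimate.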

Yes, speckle tracking currently only supports GRDs, which are multilooked & detected but not speckle-filtered, so the deterministic speckle-noise (without the phase-component) is still there to be exploited, admittedly at a lower resolution than in the SLCs. We had a requirement on the development of coherent speckle-tracking from SLCs but that was dropped and higher-priority functionality was developed instead.

I completely agree. With coherent speckle-tracking in slant-range the phase would need to be treated appropriately. Unfortunately I cannot promise that this will be implemented in the near future… of course if someone developed it in Python or Java we could take it on board quite easily…

Thanks for the answer

I’ll probably have to develop the technique myself. If I’m allowed to share, I will.

Mmm. I’m not sure I understand. Do you have any references or technical documentation?

Hi qglaude!

Many thanks for the effort you spent on my questions.

I am now concentrating on a chemical explosion case that happened in China. For this sudden, fast-moving event, InSAR offers little information, so I am trying to get some help from offset tracking. My background is in InSAR and I am new to SAR offset tracking. I do not want to spend too much time on detailed research into offset tracking; I am just looking for software that is robust and trustworthy enough to perform this analysis.

Considering all your answers, can I conclude that SNAP may not be an ideal (or expert) tool for offset tracking? The "Offset tracking tutorial" does include an example using S-1 GRD data, but at the end of the tutorial, in the visualization step, the software cannot show the displacement in the two directions separately, while we sometimes care more about these separate movements.

Do you happen to know any software or tools that are available and more specialized in SAR offset tracking? I only know COSI-CORR, developed by JPL, but it seems to work with optical images only. Could you please give me some suggestions on which tools or software to choose?

Thanks!

SNAP performs offset tracking well. The “problem” is that it only uses GRD data. I still get good results with it though. But it is not speckle tracking strictly speaking.

In your case, I’m a bit doubtful. Offset tracking techniques in SAR are mostly used for glacier displacement. But just try it. If you get any results, I’m really interested.

I cannot give you any software names. I mostly use software developed in our company and SNAP. However, I can give you this link, which lists plenty of open and commercial software (you need to be registered): https://eo-college.org/topic/goodies/

Sorry for the late response. I really appreciate the experience you shared! I will let you know how my offset tracking on the explosion case goes as soon as I get a reasonable result.

Best wishes!

Is it possible to perform offset tracking in SNAP using satellite products other than Sentinel?

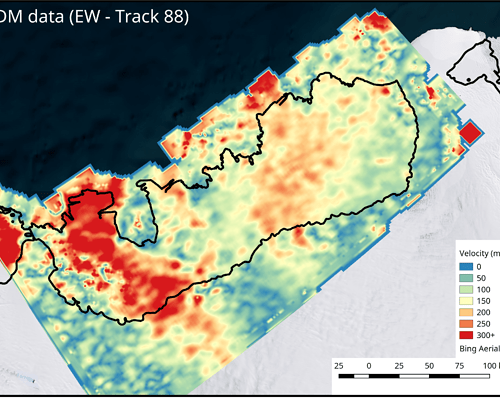

Can someone tell me whether I can use EW instead of IW for offset tracking? When I do so, I get gaps like the ones in this question. I have tried increasing the velocity up to 10.0 m/d and the window size up to 512.