Dear all

I have 413 Sentinel-1 products (subsets of the original scenes) and I want to stack them with SNAP gpt. I tried this command:

gpt /scratch/project_2004361/codes/stkraph2run.xml /scratch/project_2004361/codes/data_list.txt /scratch/project_2004361/results/Stack_All.tif

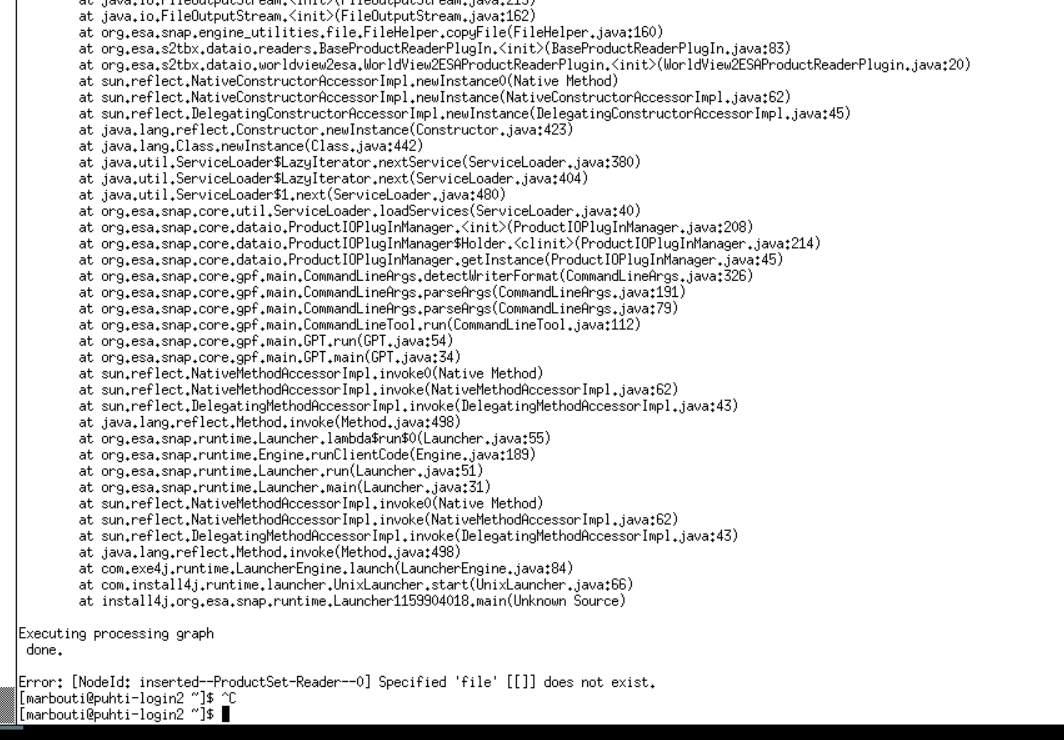

I got an error that says:

Error: [NodeId: inserted-ProductSet-Reader-0] Specified 'file' [[]] does not exist.

I do not know why.

You can find stkraph2run.xml here.

stkraph2run.xml (41.3 KB)

Have you tried to remove the spaces in the fileList parameter?
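As a minimal sketch of that suggestion, the snippet below joins the paths from a list file into one comma-separated string with no spaces or empty entries. The function name `build_file_list` and the demo paths are hypothetical, and it assumes the product paths themselves contain no spaces.

```shell
#!/bin/bash
# Hypothetical helper: turn a list file (one product path per line) into a
# single comma-separated string without spaces or blank entries.
build_file_list() {
    # $1: text file with one product path per line
    # Strip spaces, tabs and carriage returns, drop empty lines, join with commas.
    tr -d ' \t\r' < "$1" | grep -v '^$' | paste -sd, -
}

# Demo with a throwaway list (placeholder paths, not real products)
demo_list=$(mktemp)
printf '%s\n' ' /data/S1A_subset_1.dim ' '' '/data/S1A_subset_2.dim' > "$demo_list"
file_list=$(build_file_list "$demo_list")
echo "$file_list"
rm -f "$demo_list"
```

The resulting string could then be pasted into the fileList parameter of the graph, or passed on the command line if the graph exposes that parameter as a variable.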

Thanks. I modified it. You can find both list.txt and stkraph2run.xml (this version uses 102 products) here: list.txt (7.1 KB), stkraph2run.xml (11.0 KB).

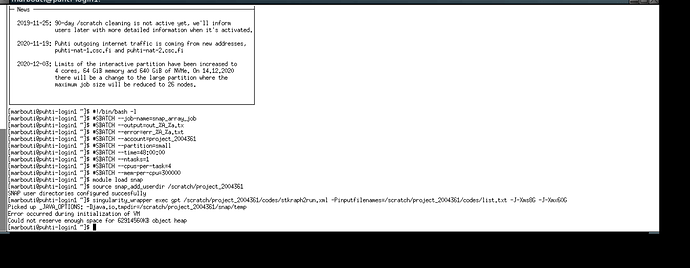

I connected to a server and used a partition (small) with 382 GiB of memory.

Then I used the script below:

#!/bin/bash -l

#SBATCH --job-name=snap_array_job

#SBATCH --output=out_%A_%a.txt

#SBATCH --error=err_%A_%a.txt

#SBATCH --account=

#SBATCH --partition=small

#SBATCH --time=48:00:00

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=4

#SBATCH --mem-per-cpu=300000

module load snap

source snap_add_userdir /scratch/project_name

singularity_wrapper exec gpt /scratch/project_name/codes/stkraph2run.xml -Pinputfilenames=/scratch/project_name/codes/list.txt -J-Xms8G -J-Xmx60G
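A quick sanity check on the memory request may help here. Slurm reads `--mem-per-cpu` in megabytes by default and multiplies it by the number of CPUs, so the sketch below just does that arithmetic with the values from the script above. The function name is hypothetical, and the 382 GiB figure is the one quoted for the partition.

```shell
#!/bin/bash
# Total memory requested by the job = mem-per-cpu (MB) x cpus-per-task.
total_request_mb() {
    echo $(( $1 * $2 ))
}

mem_per_cpu_mb=300000   # from "#SBATCH --mem-per-cpu=300000"
cpus_per_task=4         # from "#SBATCH --cpus-per-task=4"
partition_gib=382       # memory figure quoted for the "small" partition

total_mb=$(total_request_mb "$mem_per_cpu_mb" "$cpus_per_task")
echo "requested: ${total_mb} MB (about $(( total_mb / 1024 )) GiB), partition: ${partition_gib} GiB"
```

If the request turns out larger than what the partition offers, lowering `--mem-per-cpu` so that the product fits is probably worth trying before tuning the Java heap.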

The first time, I put 10 products in list.txt and used the command below:

singularity_wrapper exec gpt /scratch/project_name/codes/stkraph2run.xml -Pinputfilenames=/scratch/project_name/codes/list.txt -J-Xms8G -J-Xmx60G

It worked.

So I tried more data: I put 102 products in list.txt and used the same command again:

singularity_wrapper exec gpt /scratch/project_name/codes/stkraph2run.xml -Pinputfilenames=/scratch/project_name/codes/list.txt -J-Xms8G -J-Xmx60G

But it said:

Error occurred during initialization of VM

Could not reserve enough space for 62914560KB object heap
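The number in this message is the requested maximum heap expressed in KB, so it can be mapped back to the `-Xmx` value that was passed. A small sketch of the conversion (the helper name `kb_to_gib` is made up for illustration):

```shell
#!/bin/bash
# Convert the heap size reported by the JVM (in KB) to whole GiB.
kb_to_gib() {
    echo $(( $1 / 1024 / 1024 ))
}

kb_to_gib 62914560    # prints 60, matching -Xmx60G
kb_to_gib 314572800   # prints 300, matching -Xmx300G
```

So the JVM is reporting that it could not get the full heap that `-Xmx` asked for at startup.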

You can also see the error here:

I do not know what the problem is.

I think I should change Xms or Xmx, but I do not know how to do that on the server.

I would be thankful if you could help me.

One more thing comes to mind:

The first time, I ran it for 10 products and used the script below:

#!/bin/bash -l

#SBATCH --job-name=snap_array_job

#SBATCH --output=out_%A_%a.txt

#SBATCH --error=err_%A_%a.txt

#SBATCH --account=project_name

#SBATCH --partition=small

#SBATCH --time=48:00:00

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=4

#SBATCH --mem-per-cpu=300000

module load snap

source snap_add_userdir /scratch/project_name

singularity_wrapper exec gpt /scratch/project_name/codes/stkraph2run.xml -Pinputfilenames=/scratch/project_name/codes/list.txt -J-Xms8G -J-Xmx60G

It worked.

On my second try I kept everything the same, but used 102 products and left out the memory options:

singularity_wrapper exec gpt /scratch/project_name/codes/stkraph2run.xml -Pinputfilenames=/scratch/project_name/codes/list.txt

I got this error:

Error: GC overhead limit exceeded

See also the attachment.

My guess:

I think that when I entered -J-Xms8G -J-Xmx60G the first time, the heap became reserved or fixed at that size.

That would be why it cannot handle 102 products and says:

GC overhead limit exceeded

I tried to change it with options like:

-J-Xms8G -J-Xmx300G

-J-Xms8G -J-Xmx200G

and so on.

But I got the error below:

Error occurred during initialization of VM

Could not reserve enough space for 314572800KB object heap

or something similar.

I think it is stuck at -J-Xms8G -J-Xmx60G and I cannot change it.

Any ideas?

This means that there is not enough free RAM. You said in another thread that you have 64 GB of RAM. Probably more than 4 GB is already occupied by other applications on your system, so try a smaller value, maybe 55G.
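One rough way to pick such a value is to look at how much memory is currently available and leave some headroom. The sketch below assumes a Linux system that exposes `MemAvailable` in `/proc/meminfo`; the function name and the 4 GiB default headroom are illustrative choices, and inside a batch job the real limit may be the job's memory allocation, not this number.

```shell
#!/bin/bash
# Suggest a -Xmx value in whole GiB: available memory minus some headroom.
suggest_xmx_gib() {
    local avail_kb=${1:-0}        # available memory in KB
    local headroom_gib=${2:-4}    # memory to leave free (illustrative default)
    local xmx=$(( avail_kb / 1024 / 1024 - headroom_gib ))
    if (( xmx < 1 )); then
        xmx=1
    fi
    echo "$xmx"
}

# Read the currently available memory (Linux only)
avail_kb=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
echo "-J-Xmx$(suggest_xmx_gib "$avail_kb")G"
```

For example, with 60 GiB available and 5 GiB of headroom this gives 55, in line with the value suggested above.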