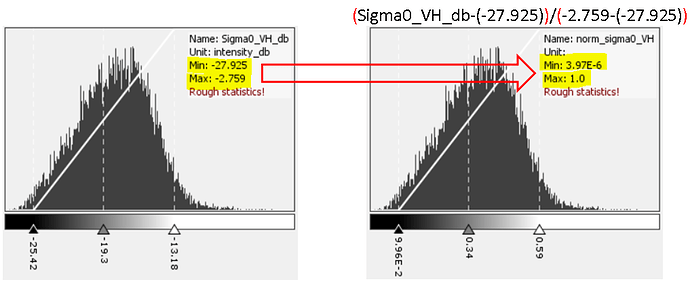

Band Maths expression used:

`(Sigma0_VH_db - min(Sigma0_VH_db, Sigma0_VH_db)) / (max(Sigma0_VH_db, Sigma0_VH_db) - min(Sigma0_VH_db, Sigma0_VH_db))`

Expected: `[Sigma0_VH_db - min(Sigma0_VH_db)] / [max(Sigma0_VH_db) - min(Sigma0_VH_db)]`, where min and max are taken over the whole band.

In other words, I expect an output whose histogram lies in the range [0.0, 1.0], as shown in the right-hand image.
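For reference, the intended computation is an ordinary min-max normalization, where the minimum and maximum are single values computed over the whole band rather than per pixel. Below is a minimal sketch in plain Python. The function name `min_max_normalize` and the sample dB values are hypothetical stand-ins for the pixels of Sigma0_VH_db. They are not SNAP code.

```python
def min_max_normalize(values):
    """Rescale values to [0.0, 1.0] using the band-wide minimum and maximum.

    `values` is a hypothetical flat list standing in for the pixels of
    Sigma0_VH_db; real data would come from the product itself.
    """
    lo = min(values)  # one scalar for the whole band
    hi = max(values)  # one scalar for the whole band
    if hi == lo:
        raise ValueError("constant band: max == min, normalization undefined")
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical sample of VH backscatter values in dB
sigma0_vh_db = [-25.0, -20.0, -15.0, -5.0]
print(min_max_normalize(sigma0_vh_db))  # → [0.0, 0.25, 0.5, 1.0]
```

The key point is that `min` and `max` each reduce the entire band to one number before the per-pixel arithmetic runs. That is what maps the darkest pixel to 0.0 and the brightest to 1.0.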

Obtained: NaN output.

I just want the min and max of band “Sigma0_VH_db”, but the functions expect two input parameters, e.g. min(@,@) for the minimum. Can you please shed some light on this? The help section isn’t clear. Thanks in advance!
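Assume that min(@,@) and max(@,@) compare their two arguments pixel by pixel, which their two-parameter signature suggests. In that case `min(Sigma0_VH_db, Sigma0_VH_db)` is simply `Sigma0_VH_db` itself, and the posted expression reduces to 0/0 at every pixel. In IEEE 754 floating-point arithmetic, 0.0/0.0 is NaN. The sketch below emulates that reading for single pixel values in plain Python. The helper `pixelwise_expression` is hypothetical and only illustrates the arithmetic. It does not show how SNAP evaluates expressions.

```python
import math


def pixelwise_expression(x):
    """Emulate the posted expression for one pixel value x.

    Assumption: min(a, b) and max(a, b) compare their two arguments per
    pixel, so min(x, x) == max(x, x) == x.
    """
    numerator = x - min(x, x)            # always 0.0
    denominator = max(x, x) - min(x, x)  # always 0.0
    try:
        return numerator / denominator
    except ZeroDivisionError:
        # Python raises on 0.0 / 0.0; IEEE 754 arithmetic yields NaN instead
        return math.nan


for pixel in [-25.0, -20.0, -15.0, -5.0]:
    print(pixel, pixelwise_expression(pixel))  # every result is nan
```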