Hi,

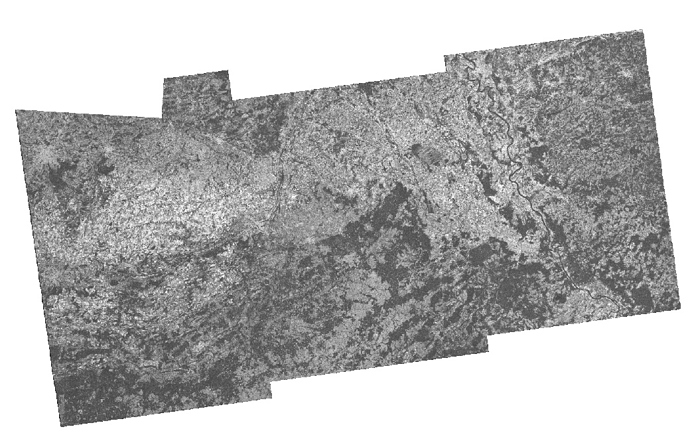

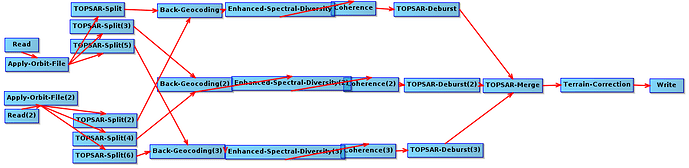

We’re experiencing some issues when we try to run GPT with the snap.gpf.useFileTileCache=true option on a Spark cluster. We use it to generate SLC Coherence products, but sometimes a part of the data is missing (as can be seen in the top-left corner of the picture below). The missing data problem doesn’t occur consistently, even on the same input data. So that rules out corrupt input data.

At first we thought that this might be related to the location of the file tile cache, and that Spark was removing files from the /tmp directory that were in use by the processing. So we tried to set the tmpdir inside the current working directory so that it doesn’t interfere with other processes. But still we’re seeing the same issue.

Here is the full list of parameters that we use: -XX:MaxHeapFreeRatio=60 -Dsnap.userdir=. -Dsnap.dataio.bigtiff.tiling.height=256 -Dsnap.dataio.bigtiff.tiling.width=256 -Dsnap.jai.defaultTileSize=128 -Dsnap.jai.tileCacheSize=6000 -Dsnap.dataio.bigtiff.compression.type=LZW -Dsnap.parallelism=16 -Dsnap.gpf.useFileTileCache=true -Djava.io.tmpdir=./tmp

Do we have to set any other parameters or do you have an idea what could be the cause of this problem?

Thanks in advance!