Hi,

I’m trying to understand what correction (mathematically) still needs to be applied to GRD images in order to project them onto a regular WGS84 grid, such that points in a rendered tiff align between different granules. Note that in this case I’m monitoring the edges of islands, which are at sea level (approximately, and certainly closely enough that elevation can’t explain the error I’m seeing), so there should be no need for DEM-based RTC.

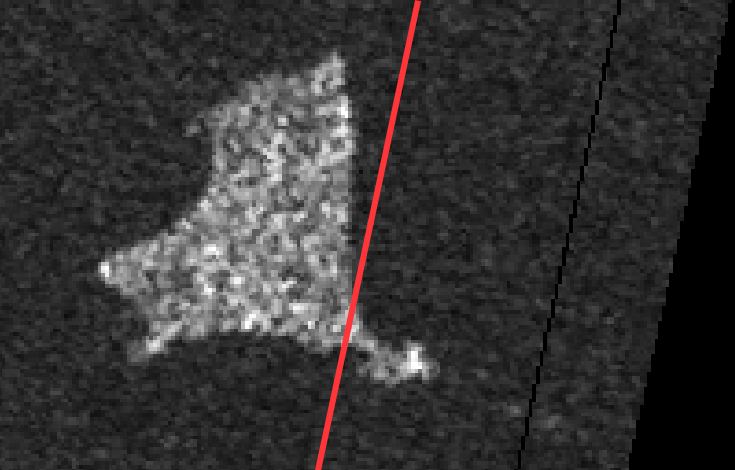

Take, for example, these 2 images, which are small portions on the eastern edge of

S1A_IW_GRDH_1SDV_20250309T213526_20250309T213551_058233_07324A_A474.SAFE

and

S1A_IW_GRDH_1SDV_20250321T213527_20250321T213557_058408_073938_D1AB.SAFE


The documentation about S1-GRD products is extensive, but they’re clearly not quite in WGS84 space. It looks like the azimuth axis is fairly consistent between captures on a given track, but the range axis isn’t. If you then run SNAP’s Ellipsoid Correction->Average Height Range Doppler, everything looks correct afterward, but I’m trying to understand what that’s doing, and why S1 GRD images are in an almost-but-not-quite geographic coordinate system.

I’d like to fully understand how this works, ideally so that I can implement the final projection step myself without having to pick through everything RangeDopplerGeocodingOp.java is doing.

Inside the vh/vv tiff, what we have is a 2D grid of pixels in range/azimuth space, plus a coarse-ish grid of tie points which map specific pixels to their corresponding WGS84 longitude/latitude/height (the X/Y/Z components of each tie point). Linear interpolation of world-space position is used between tie points, which are in about a 20x40 grid. I’m not quite sure why the tie points have an elevation, but that seems relevant?
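To make that concrete, here’s a rough sketch of how I understand the tie-point lookup. The function name, argument layout and any numbers you feed it are my own placeholders for illustration (not the actual reader code from SNAP or GDAL), and it assumes the tie points sit on a regular row/column grid:

```python
from bisect import bisect_right


def interp_tie_points(pixels, lines, grid, pixel, line):
    """Bilinearly interpolate a tie-point grid at image position (pixel, line).

    pixels: sorted pixel (range) coordinates of the tie-point columns
    lines:  sorted line (azimuth) coordinates of the tie-point rows
    grid:   grid[row][col] = (lat, lon, height) at (lines[row], pixels[col])
    """
    # Locate the grid cell containing the query. Queries outside the grid
    # are clamped to the outermost cell, so they get linearly extrapolated.
    col = min(max(bisect_right(pixels, pixel) - 1, 0), len(pixels) - 2)
    row = min(max(bisect_right(lines, line) - 1, 0), len(lines) - 2)

    # Fractional position inside the cell (0..1 when inside the grid).
    u = (pixel - pixels[col]) / (pixels[col + 1] - pixels[col])
    v = (line - lines[row]) / (lines[row + 1] - lines[row])

    p00, p01 = grid[row][col], grid[row][col + 1]
    p10, p11 = grid[row + 1][col], grid[row + 1][col + 1]

    # Blend along range (u) first, then along azimuth (v), per component.
    return tuple(
        (1 - v) * ((1 - u) * a + u * b) + v * ((1 - u) * c + u * d)
        for a, b, c, d in zip(p00, p01, p10, p11)
    )
```

This is the whole of the georeferencing model as I currently understand it: nothing in it knows about slant range or orbit geometry, only about the tie points themselves.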

Can somebody explain algorithmically / mathematically what I’m missing? Specifically, the errors in georeferencing seem to be entirely in the range direction, and are too large to be interpolation artefacts due to the tie-point grid being relatively coarse (I also made sure that screenshot was close to a tie point, so it’s not just that!).

To be clear, understanding the math of what’s happening here is more important to me than constructing a processing chain - I know that applying range-doppler correction to all images aligns them correctly, but that’s slow given large numbers of captures, and in my case I expect some (ellipsoid only, not DEM) approximations or optimizations can be made.

Please note that I’m a 3D graphics programmer by background, not a space scientist. Apologies for any incorrect terminology.