Hello, dear colleagues!

I work with data from the Sentinel-3 satellite system, namely land surface temperature (LST). This is SLSTR Level-2 data.

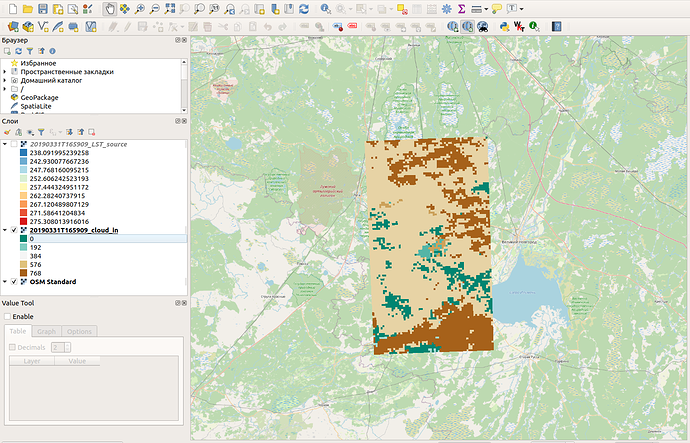

I have a problem - I can’t determine where the clouds are in the image. In the source archive, I found a large number of additional matrices that could be used. The layer that seemed most similar to a cloud mask is “flags_in” - “cloud_in”.

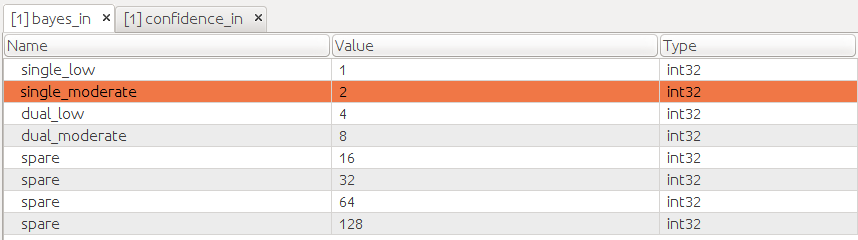

But the problem is that I could not find any information about what each code means. The legend shown in the preview function of the web service https://scihub.copernicus.eu does not match the data: the layer contains codes that have no description. For example, for cloud_in the legend lists the values 1 2 4 8 16 32 64 128 256 512 1024 2048 4096 8192 16384 32768, but my data contains codes such as 0, 768 …
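One possible reading, stated here as an assumption and not confirmed by any documentation I found: the listed values are all powers of two, so they may be single-bit flags, and a pixel value may be the sum of several of them. Under that assumption 768 would be 256 + 512. A minimal Python sketch of the decomposition (the helper name `decode_flags` is hypothetical):

```python
def decode_flags(value, n_bits=16):
    """Split an integer into the powers of two (single-bit flags) it contains.

    Assumes the layer is a bit field with n_bits flags; this is a guess
    based on the listed codes 1 ... 32768, not a documented fact.
    """
    return [1 << bit for bit in range(n_bits) if value & (1 << bit)]


print(decode_flags(768))  # [256, 512]
print(decode_flags(0))    # []
```

If this reading is right, a value of 0 means that none of the flags is set, and each non-zero value has to be interpreted flag by flag instead of being looked up as a single code.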

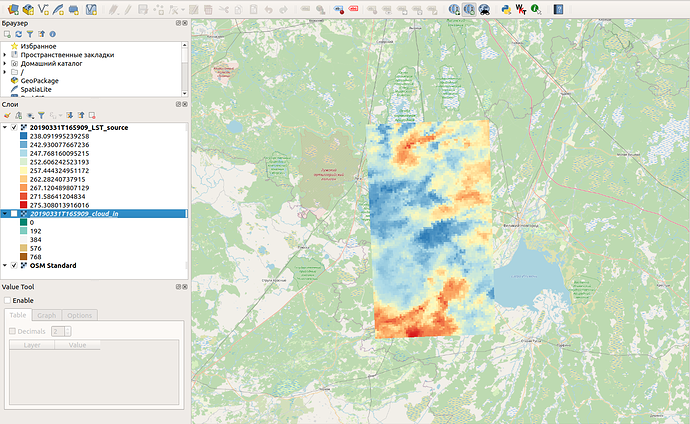

I assumed that all non-zero values in the cloud_in matrix are clouds, and used this cloud mask to remove every pixel of the LST matrix whose code is not 0. But when I checked the result, I found that something is wrong with the resulting temperature layers. For example, I have an image of an area a little south of St. Petersburg (Russia) taken on March 31, 2019. Almost all temperatures in the image are far below 0, and some pixels show -33 degrees Celsius.
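The masking step described above can be sketched roughly as follows. This is an illustration with made-up numbers, not my actual script: the function name `mask_lst` and the small arrays are hypothetical, and the rule "any non-zero code is cloud" is exactly the assumption in question.

```python
import numpy as np


def mask_lst(lst, cloud_in):
    """Return a copy of lst with NaN wherever cloud_in is non-zero.

    Assumption: every non-zero cloud_in value marks a cloudy pixel.
    """
    lst = np.asarray(lst, dtype=float)
    cloud_in = np.asarray(cloud_in)
    return np.where(cloud_in != 0, np.nan, lst)


# Synthetic 2x2 example (values are invented, not read from a product file).
lst = np.array([[270.0, 240.15], [281.3, 265.0]])
cloud_in = np.array([[0, 768], [0, 2]])
masked = mask_lst(lst, cloud_in)
print(masked)
```

Pixels with a non-zero code become NaN, so they drop out of later statistics computed with functions such as `np.nanmean`.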

The temperature values in the image itself are stored in kelvins.
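For reference, the conversion between the two units used in this post is a fixed offset of 273.15, so -33 degrees Celsius corresponds to 240.15 K. A small sketch (the function name `kelvin_to_celsius` is only illustrative):

```python
KELVIN_OFFSET = 273.15  # 0 degrees Celsius expressed in kelvins


def kelvin_to_celsius(t_kelvin):
    """Convert a temperature from kelvins to degrees Celsius."""
    return t_kelvin - KELVIN_OFFSET


print(round(kelvin_to_celsius(240.15), 2))  # -33.0
```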

This certainly cannot be right. In all the images the temperature range is very large: the value of a single pixel can sometimes change by 30 degrees within one day. Moreover, the temperature of surface water bodies is negative in many cases.

I compared the Sentinel images with MODIS images, and MODIS showed nothing like that. Everything looked reasonable there: the water temperature was above 0, etc.

Please help me resolve this issue. Maybe I am identifying the clouds in the images incorrectly. Which layer (and which values in it) do I need to use for the cloud mask?

Or is this caused by the satellite itself, and such strange results are a feature of the sensor?

P.S. I work with the files in Python.