@ABraun, could you please tell us how long the processing took in both cases? I mean Single Lee Sigma (1 source band, Lee Sigma, 1 Look, Window 7x7, Sigma 0.9, Target 3x3) and Multi-temporal Lee Sigma (15 source bands, Lee Sigma, 1 Look, Window 7x7, Sigma 0.9, Target 3x3).

Sorry, I can’t remember. I used a machine with 32 GB RAM, so it only took a couple of minutes in all cases.

Oh really? I used a MacBook with 16 GB RAM and a Core i7, and one cycle in batch mode takes about 15 minutes for 8 products (VV, VH). Without multi-temporal speckle filtering it took less than 1 minute per cycle.

(Read - ApplyOrbitFile - ThermalNoiseRe - Calibr - MultiSpeckle - TerCorrection - LinearToFromdB - Write)
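If someone wants to compare timings for a chain like this outside the Batch Processor, here is a minimal sketch that builds one `gpt` call per product and times it. It assumes the graph was saved as an XML file that uses `${input}` and `${output}` placeholders (those two parameter names, the file names, and the `_proc` suffix are hypothetical) and that `gpt` is on the PATH.

```python
import subprocess
import time
from pathlib import Path


def build_gpt_commands(graph, products, out_dir):
    """Return one gpt command (as an argument list) per input product.

    Assumes the graph XML contains ${input} and ${output} placeholders;
    these parameter names are illustrative, not fixed by SNAP.
    """
    commands = []
    for product in products:
        product = Path(product)
        target = Path(out_dir) / (product.stem + "_proc.dim")
        commands.append(
            ["gpt", str(graph), f"-Pinput={product}", f"-Poutput={target}"]
        )
    return commands


def run_timed(command):
    """Run one command and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(command, check=True)
    return time.perf_counter() - start
```

Running the same products through two graphs, one with and one without the speckle filter step, and comparing the `run_timed` results should show how much of the 15 minutes the filter accounts for.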

I didn’t use the batch mode; I was only referring to the multi-temporal speckle filter.

ABraun - Could you explain how you got those coregistered S1 files into one product, as shown in your Sep 23 screenshot of the Bands in your product? I’m trying to play with multitemporal filtering and that part is eluding me.

I can coregister two S1 files at a time, but how can I combine several such products into one?

The Radar > Coregistration > Coregistration dialog throws an error when it senses multiple inputs as S1 TOPS files, so that doesn’t work.

The S1 TOPS Coregistration dialog only works with two files at a time.

I can create a 2-file coregistration workflow and open it in the Batch Processor along with all of the input files in my time series, but I’m not sure which master it is selecting and the output products have strange names. *** AND *** the burst that I’m trying to coregister has different numbers for different collects in my time series!

I apologize, I know the answer is probably easy, but I haven’t found it on my own.

Thanks,

Tom

To be honest, I used GRD data, so I added all of them in the coregistration dialogue and it worked.

I’m not sure how to do it with multiple TOPS files, sorry. But I’ll have a try later.

Think I got it. With three separate S1 SLC files, make a Workflow that coregisters them all (everything to the left of and including the Back-Geocoding box below), then pass the results to MTSF (with an intermediate debursting step required). The Terrain Correction is just there to help me visualize things.

The output is a product like that below, which is what I expected (with the 20Apr collect being the master and the two other collects being slaves):

Assuming this is correct (and if I’m wrong, somebody please speak up), I’d like to respectfully opine that discovering this process was a bit harder than it needed to be. If the MTSF dialog would accept Bands that weren’t in the same product, this would have been easier to do. If the Stack Creation dialog would allow S1 TOPS SLC products, ditto. If the S1 TOPS Coregistration dialog would allow more than two products to be coregistered, ditto. If SNAP had some easy method to simply copy a band from one Product into another, ditto.

Again, I hate to complain, but yikes - I tried a lot of things before luckily hitting on one that worked. I’m not the sharpest wavelength in the spectrum, but for such a simple, seemingly common, task, that took a while to discover.

Fingers crossed that I actually did it right

Thanks,

Tom

Thanks for showing. Good luck - I would be interested in the results.

Maybe one hint lveci gave yesterday in a similar topic

So, if it takes too long, you could stop after the back geocoding and start the debursting separately.

Good morning, I am trying to create a graph like @tqrtuomo’s, but I get this error in the back geocoding step: “Please select two source products”. Maybe I have to change one of the parameters, but I don’t know which one. Could someone help me?

Thanks

Prior to version 5, the backgeocoding only allowed 2 input products. Update to version 5.

Thanks a lot for your answer, I updated my version and the backgeocoding works.

At the multi-temporal speckle filter step, when I choose the bands I want to analyse from the different products, this error appears:

Operator ‘MultiTemporalSpeckleFilterOp’: Value for ‘Source bands’ is invalid.

What kind of values should I use?

Hi ABraun,

first thanks for this great comparison … very interesting and helpful.

I was just curious about your SNAP/gpt setup. You said that with your machine (32 GB RAM) the processing of 15 S1 images only took a couple of minutes. I have the same amount of RAM and the same number of S1 images I’d like to process, and I’m really struggling to find a setup that would work for me.

Also, I suppose that if you go on to use the co-registered and filtered stack, you would need to apply the Stack-split operator. Do you have any experience with that? Even with 32 GB of RAM, I constantly get a data buffer error.

Thanks!

Val

For this case I used GRD products, so no TOPS Deburst or Split was necessary. The ‘couple of minutes’ referred to the filter only.

I didn’t change any of the memory options.

I understand, I’m also using solely GRD products.

In your case, how long did the Co-registration take for 15 products?

As for the split, I was thinking more about the steps required for the processing after that: once you have your co-registered and filtered stack, reverting back to single files for Terrain Correction etc.

Thanks

The co-registration surely took some time. I don’t remember exactly, but it could have been 120 minutes.

You can proceed with the whole stack, for example for the terrain correction. I don’t see any sense in working with the single images again as long as you are not interested in one in particular.

Do you have any suggestions for a minimum number of datasets for a good result using multitemporal filtering? I haven’t found any information in the literature. Or is there nothing like a rule of thumb, because every new dataset has an impact on the result?

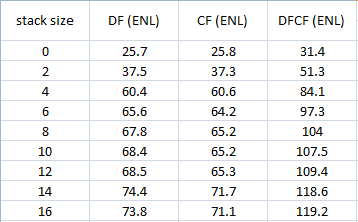

I tried to find this number by varying the stack size from 2 to 16 in steps of 2 (each step adds another date with VH and VV polarisation). For each result I computed the ENL of samples in the forest (homogeneous areas), always based on the master dataset (VH, 29Aug2016, Gamma0, dB). All datasets are preprocessed: (1) Thermal noise removal, (2) Orbit, (3) Terrain flattening (SRTM1sec), (4) RD Terrain Correction, (5) Coregistration (Stack, Orbit).

DF = Deciduous forest samples

CF = Coniferous forest samples

DFCF = Samples of both forest types (different areas)

edited table:

Zero means that no (multitemporal) speckle filter was applied.

According to this test I would use a minimum number of 8 or 10 datasets.
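For anyone who wants to reproduce this kind of check, here is a minimal sketch of the ENL calculation, assuming the usual definition ENL = mean² / variance over a homogeneous sample. ENL is normally computed on linear intensity, so the sketch converts dB values back to linear first. The function names are my own and not part of SNAP.

```python
from statistics import mean, variance


def db_to_linear(value_db):
    """Convert a backscatter value from dB to linear power."""
    return 10 ** (value_db / 10)


def enl(samples_linear):
    """Equivalent number of looks of a homogeneous sample.

    Uses ENL = mean^2 / variance on linear intensity values,
    with the sample variance (n - 1 in the denominator).
    """
    m = mean(samples_linear)
    v = variance(samples_linear)
    if v == 0:
        raise ValueError("variance is zero; ENL is undefined for constant samples")
    return m * m / v
```

With forest pixel values exported in dB, the call would look like `enl([db_to_linear(v) for v in forest_db])`, where `forest_db` is a hypothetical list of the sampled values.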

Best regards!

I haven’t seen any empirical numbers either, but as a guess I would agree that the filter reaches good quality with 8 images or more. It depends a bit on the character of the surfaces, so if there is generally higher speckle, more images could surely help to reduce this effect statistically.

I would say the minimum number of images is 2. As your results show, after a certain number the extra improvement becomes very small. Also, if there are large changes in the area of interest (new buildings, flooding etc.) within the temporal stack, the filtering will cause smearing, so it does not make sense to put an unlimited number of images into the filter.
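To illustrate the smearing with numbers, here is a toy per-pixel sketch. It assumes the commonly cited Quegan-and-Yu style formulation, where each filtered date is its own local mean multiplied by the average of the intensity-to-local-mean ratios over all dates. Whether SNAP's operator matches this exactly is an assumption on my part, and the function name is made up for the example.

```python
def multitemporal_pixel(intensities, local_means):
    """Filter one pixel across N dates (Quegan-and-Yu style sketch).

    intensities: linear intensity of the pixel at each date
    local_means: spatial local mean around the pixel at each date
    Returns the filtered intensity at each date.
    """
    n = len(intensities)
    # Average ratio of pixel value to local mean, shared by all dates.
    ratio = sum(i / m for i, m in zip(intensities, local_means)) / n
    return [m * ratio for m in local_means]


# Stable pixel: every date agrees with its local mean, nothing changes.
stable = multitemporal_pixel([1.0, 1.0, 2.0, 1.0], [1.0, 1.0, 2.0, 1.0])

# A small bright target appears on the third date only. The local mean
# underestimates it, so the high ratio leaks into all the other dates.
changed = multitemporal_pixel([1.0, 1.0, 10.0, 1.0], [1.0, 1.0, 2.0, 1.0])
```

In the second case the three dates without the target come out at twice their original value, which is the kind of smearing a change inside the stack can produce.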

But the speckle reduction isn’t good when only the two polarisations of a single dataset are used as input. Judging visually, the outcome reaches a good level of speckle reduction with 8 datasets. So for tree species classification or biomass estimation, using as many datasets as possible isn’t expedient?

Could you show me an example of that kind of problem, please? I am thinking of using the stack size as a temporal window for applying the multitemporal speckle filtering. So, for example, each dataset would be filtered using 7 additional datasets.
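To make the temporal window idea concrete, here is a minimal sketch of how the dates for each filter run could be selected. It assumes the datasets are sorted by acquisition date and that the window is roughly centred on the target date and shifted inwards at both ends of the series. The function name and the centring rule are my own choices for illustration.

```python
def temporal_window(n_dates, target, size):
    """Return the indices of the dates used to filter date `target`.

    The window holds `size` dates in total (the target plus size - 1
    others), is roughly centred on the target, and is shifted inwards
    at the start and end of the series so it never leaves the stack.
    """
    if not 0 <= target < n_dates:
        raise ValueError("target index is outside the time series")
    size = min(size, n_dates)
    start = target - size // 2
    start = max(0, min(start, n_dates - size))
    return list(range(start, start + size))


# 16 dates, each filtered together with 7 additional dates.
windows = [temporal_window(16, k, 8) for k in range(16)]
```

Each entry of `windows` would then define the bands to pass to one filter run, and only the result for the target date would be kept from that run.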