I tried to fix the "Java heap space" and "GC overhead limit exceeded" errors that appear when I run my code to obtain the backscattering and incidence angle of a product with the snappy library. To do so, I doubled my virtual machine's RAM to 64 GB. I also modified the following files to increase the memory available to the computation, but it still runs below 32 GB:

.snap/snap python/build/lib/snappy.ini (java_max_mem: 62G)

.snap/snap python/snappy/snappy.ini (java_max_mem: 62G)

Program Files/snap/etc/snap.properties (snap.jai.tileCacheSize=62000 snap.jai.defaultTileSize=8192)

Program Files/snap/etc/snap.conf (default_options="--branding snap --locale en_GB -J-XX:+AggressiveOpts -J-Xverify:none -J-Xms4G -J-Xmx62G -J-Dnetbeans.mainclass=org.esa.snap.main.Main -J-Dsun.java2d.noddraw=true -J-Dsun.awt.nopixfmt=true -J-Dsun.java2d.dpiaware=false")

Program Files/snap/bin/gpt.vmoptions (-Xmx62G)

Program Files/snap/bin/pconvert.vmoptions (-Xmx62G)

What have I forgotten to change?
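One way to double-check the edits listed above is to parse the values back out of each file. The sketch below is a hypothetical helper (the names `parse_size`, `xmx_bytes` and `snappy_ini_bytes` are made up for illustration). It assumes snappy.ini begins with a `[DEFAULT]` section header and uses `key: value` lines, and it treats a line starting with `#` as a comment, so a `java_max_mem` entry that was edited but left commented out reports `None`.

```python
import configparser
import re
from typing import Optional

# Size suffixes accepted by the JVM's -Xmx flag (case-insensitive).
_UNITS = {"": 1, "k": 1024, "m": 1024**2, "g": 1024**3, "t": 1024**4}


def parse_size(text: str) -> int:
    """Convert a JVM-style size such as '62G' or '8192m' to bytes."""
    m = re.fullmatch(r"\s*(\d+)([kmgt]?)\s*", text, re.IGNORECASE)
    if m is None:
        raise ValueError(f"not a JVM size: {text!r}")
    return int(m.group(1)) * _UNITS[m.group(2).lower()]


def xmx_bytes(options: str) -> Optional[int]:
    """Return the heap limit requested by the last -Xmx in an option string.

    Matches both '-Xmx62G' (gpt.vmoptions style) and '-J-Xmx62G'
    (snap.conf style). If the flag appears several times, the JVM uses
    the last one, so that is the one reported here.
    """
    hits = re.findall(r"-Xmx(\d+[kmgtKMGT]?)", options)
    return parse_size(hits[-1]) if hits else None


def snappy_ini_bytes(ini_text: str) -> Optional[int]:
    """Return java_max_mem from snappy.ini text, or None if absent/commented out."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    value = parser["DEFAULT"].get("java_max_mem")
    return parse_size(value) if value else None
```

For example, feeding it the text of each file should give `62 * 1024**3` bytes everywhere; a `None` or a smaller number points at the file that is not doing what was intended.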

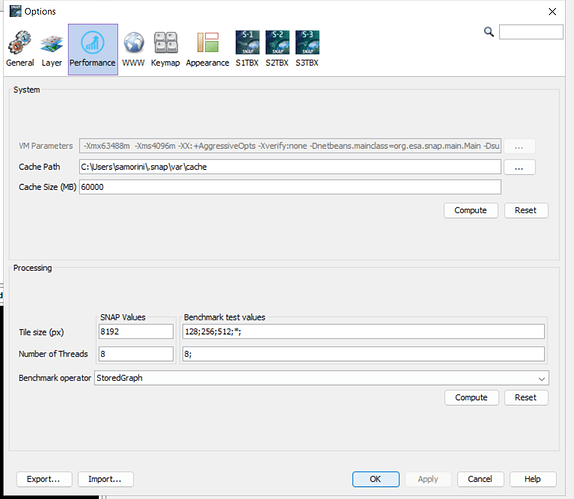

I also tried changing the Performance settings in SNAP (Tools > Options), but it still runs below 32 GB. What did I do wrong?
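The Tools > Options settings and the files above only help if they reach the JVM that snappy actually starts. A minimal sketch, assuming snappy is importable in the same Python environment that runs the processing script, asks that JVM for its own limit through jpy and `java.lang.Runtime.maxMemory()`. The helper names are hypothetical, and the reported value can be slightly below the configured `-Xmx`/`java_max_mem` value.

```python
import importlib


def jvm_max_heap_bytes() -> int:
    """Ask the JVM started by snappy for its effective maximum heap size.

    Requires snappy to be installed; importing it starts the JVM using
    the settings from its snappy.ini.
    """
    snappy = importlib.import_module("snappy")
    runtime = snappy.jpy.get_type("java.lang.Runtime").getRuntime()
    return int(runtime.maxMemory())


def to_gib(n_bytes: int) -> float:
    """Convert a byte count to GiB for display."""
    return n_bytes / 1024**3
```

Running `print(f"{to_gib(jvm_max_heap_bytes()):.1f} GiB")` should print a value close to 62 if `java_max_mem: 62G` was picked up, and close to the old limit if it was not.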

Thanks