Hello Everyone,

I would like to change/ deteriorate the NESZ of Sentinel-1 data to perform PSI processing on the modified (noisy) data using the SNAP/ StaMPS workflow.

Summary

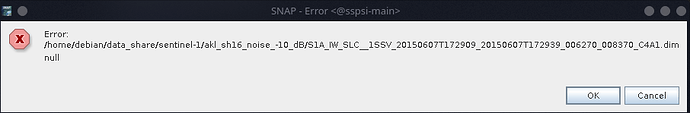

I have written some Python code (using snappy) to read S1 IW-products in SAFE format, calibrate them, add noise, and save the products in BEAM-DIMAP format. However, when I load the products into SNAP to look at the Interferometric Stack Overview, I get this error message:

The error message pops up for every product in the data set.

My workflow

I have written a python script which iterates over all S1 products in a dataset, and does the following:

-

unzip product

-

perform calibration and save calibrated product in BEAM-DIMAP (

outputImageInComplex=True) -

create a new product with the same bands as the calibrated product. The i- and q-bands are “real” bands, whereas the intensity band is a virtual band with the following expression:

expression = "i_IW1_VV * i_IW1_VV + q_IW1_VV * q_IW1_VV"The number varies from subswath to subswath, of course.

-

load calibrated product and add noise to all i- and q-bands.

-

Save new product in BEAM-DIMAP.

Below is the code I use for steps 3.-5.

# Parse command line

args = _parse_args()

# Read source product

source_product = su.read_product(args.source_product_path)

source_bands = list(source_product.getBandNames())

w = []

h = []

for band in source_bands:

sb = source_product.getBand(band)

w.append(sb.getRasterWidth())

h.append(sb.getRasterHeight())

# Create new product

width = max(w)

height = max(h)

target_product = snappy.Product(

source_product.getName(),

source_product.getProductType(),

width,

height,

)

# Copy metadata from source to target product

snappy.ProductUtils.copyMetadata(source_product, target_product)

snappy.ProductUtils.copyGeoCoding(source_product, target_product)

# Set product writer

WRITE_FORMAT = "BEAM-DIMAP"

target_dir = args.target_product_path

target_product.setProductWriter(

snappy.ProductIO.getProductWriter(WRITE_FORMAT)

)

target_product.writeHeader(snappy.String(target_dir))

# Add and configure target bands

nodata_value = np.nan

for band in source_bands:

print(f"Processing band {band}...", file=sys.stdout)

if "Intensity" not in band:

target_band = target_product.addBand(

band, snappy.ProductData.TYPE_FLOAT32

)

target_band.setNoDataValue(nodata_value)

target_band.setNoDataValueUsed(True)

target_band.ensureRasterData()

source_band = source_product.getBand(band)

w = source_band.getRasterWidth()

var = 10 ** (args.NESZ / 10) / 2

std = np.sqrt(var)

print("Setting pixel values...", file=sys.stdout)

for y in tqdm(range(height), file=sys.stdout):

data_source = np.empty(w, dtype=np.float32)

data_source.fill(nodata_value)

data_target = np.empty(width, dtype=np.float32)

data_target.fill(nodata_value)

try:

source_band.readPixels(0, y, w, 1, data_source)

noise = np.random.normal(0, std, data_source.shape)

noise[data_source == 0] = nodata_value

data_target[:w] = data_source + noise

except RuntimeError:

pass

target_band.setPixels(0, y, width, 1, data_target)

else:

iq_names = [

f"{comp}_{band[band.find('IW'):]}" for comp in ["i", "q"]

]

expression = f"{iq_names[0]} * {iq_names[0]} + {iq_names[1]} * {iq_names[1]}" # noqa

target_band = target_product.addBand(

band, expression, snappy.ProductData.TYPE_FLOAT32

)

target_band.setNoDataValue(nodata_value)

target_band.setNoDataValueUsed(True)

print("Writing data to file...", file=sys.stdout)

pm = su.createProgressMonitor()

snappy.ProductIO.writeProduct(target_product, target_dir, WRITE_FORMAT, pm)

target_product.closeIO()

Note that I set the width and height of the target product to the corresponding values of the largest subswath in the original product. I read the snappy guide and the snappy examples, and I thought I had to set the product width and height to

width = source_product.getSceneRasterWidth() # often the target product dimensions are the same as the source product dimensions

height = source_product.getSceneRasterHeight()

If I do that, however, each subswath has the dimensions of the whole product, and the target product will be 9-10 times larger (in terms of storage) than the original product.

Also, I had to restructure the individual steps into subprocesses, as otherwise I would run into memory issues. Shoutout to @Ciaran_Evans for his solution. I changed to a bigger machine now (128GB RAM, 24CPUs instead of 32GB, 8CPUs), but I haven’t checked whether the procedure would work in a single process now. Anyway, this is a side note and I don’t think the end result would depend on whether I use subprocesses or not.

When I run the steps explained above, I end up with a dataset that can actually be opened in snap, and the NESZ has changed to the desired value. I can also add the products to the Interferometric Stack Overview menu by pressing “Add Opened”. But when I click “Overview” I get the error message shown in the Summary above.

I thought the error could be caused by the BEAM-DIMAP format (because until now I have only fed SAFE data to the SNAP/ StaMPS workflow). Thus, I tried to get the Interferometric Stack Overview of the calibrated data set, which is also saved in BEAM-DIMAP, to see if I get the same error: I don’t. Instead, the Interferometric Stack Overview menu does not even appear under Radar -> Interferometric, where it is usually located.

Conclusion

In essence, I would like to have a Sentinel-1 data set, which is completely identical to the one I downloaded from Copernicus, except for the NESZ/ noise level. I want to be able to use the modified dataset in exactly the same way as the original one.

Does anyone have any idea if and how I could do that? Is my current approach close, or should I do something completely different? I was thinking to not use snappy at all, and work directly on the geotiffs in the SAFE directory. I wasn’t sure how to implement the calibration though, so I thought using snappy would make things easier for me.

Also, this is my first post in the step forum, so I hope my post conforms with the guidelines and requirements. If not, I’d appreciate feedback :-).

Miscellaneous

-

SNAP Version 8.0

-

Debian machine with 128GB RAM