Hi Marco,

Could you please give me your opinion? I'm a bit confused. On my previous machine (16 GB RAM and a 1 TB HDD), creating the coherence graph from two SLC images with multilooking took anywhere from a few seconds to one or two minutes.

Now the machine has 32 GB RAM and a 2 TB SSD:

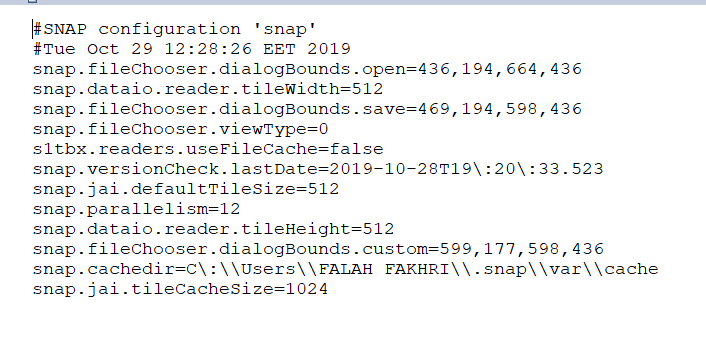

On it, the same process takes more than 10 minutes. I have reinstalled SNAP, and this is the snap.properties file from snap:

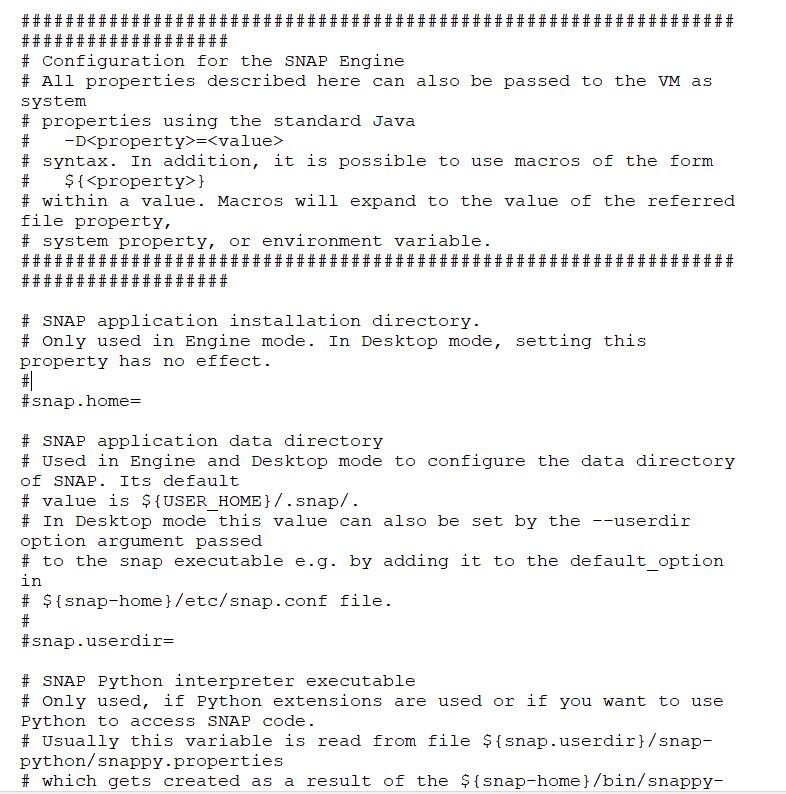

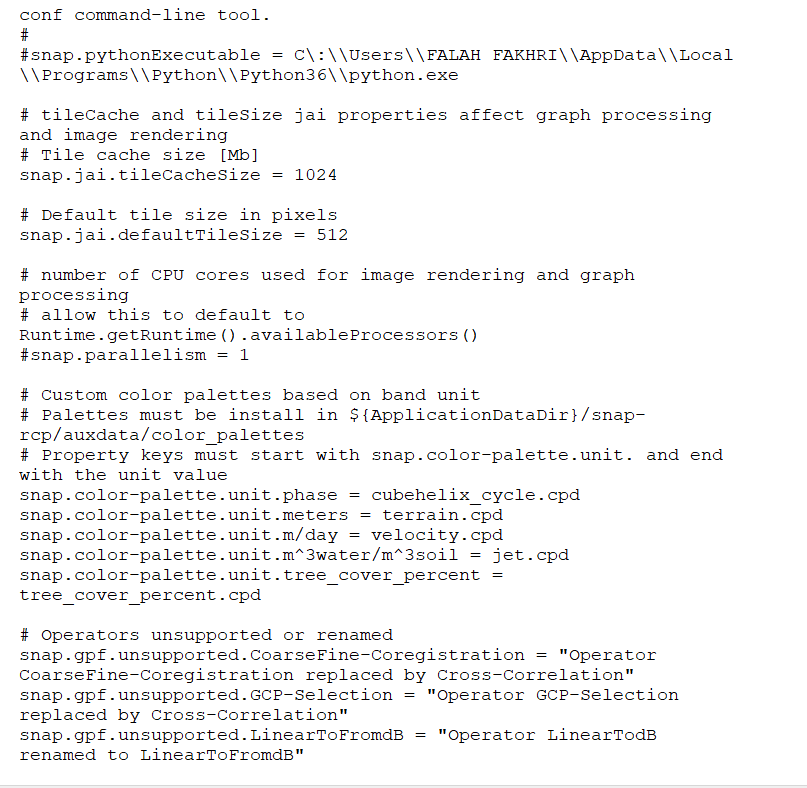

And the following is the SNAP properties file from SNAP\etc:

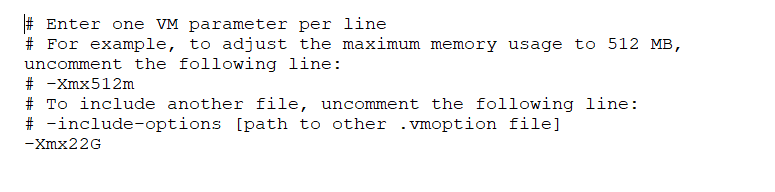

And this is the gpt.vmoptions file: