Please help me clarify the terms and how the algorithm works.

Grid Azimuth/Range Spacing means the size of a reference patch/‘chip’/‘template’ in the master (reference) image. Every GCP on the grid is associated with a reference patch, which is centered on that GCP.

Registration Window means a search area in the slave image, located around the GCP in question and having that GCP as its center point. A reference patch ‘moves’ step by step inside the search area. The step length equals 1 pixel (= 10 m). Cross-correlation is calculated at every step. A patch of the search area matches the reference patch where the cross-correlation reaches its maximum. Is this correct?

If so, I have further questions. For example, what if the maximum correlation value, say 0.3, exceeds the cross-correlation threshold but is still too low for a good match?

Can I calculate small velocities when the displacement precision is 1 pixel (10 m)? I see velocities of about 0.1 to 1 m/day on the glacier of interest, which means a displacement of only 1.2 to 12 meters when using consecutive images acquired 12 days apart.
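To put the numbers in this question into pixel units, here is a small sketch. The 10 m pixel spacing and the 12-day interval are the values quoted above; the function name is made up for illustration.

```python
def displacement_pixels(velocity_m_per_day, interval_days, pixel_spacing_m):
    """Displacement accumulated between two acquisitions, expressed in pixels."""
    return velocity_m_per_day * interval_days / pixel_spacing_m

# Velocity range quoted in the post: 0.1 to 1 m/day, 12 days apart, 10 m pixels.
slow = displacement_pixels(0.1, 12, 10.0)
fast = displacement_pixels(1.0, 12, 10.0)
print(f"slow: {slow:.2f} px, fast: {fast:.2f} px")
```

So the expected shift is roughly 0.12 to 1.2 pixels, mostly below one pixel, which is why the question of sub-pixel precision matters here.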

Yes, please have a look at the answers by mengdahl and qglaude: Offset tracking - #27 by mengdahl

Thank you. They write that Offset Tracking detects sub-pixel movements. But how can I estimate the real precision in SNAP?

Based on your nice description of this module, I think we can get good, reasonable results by setting those parameters correctly.

Grid Azimuth/Range Spacing only determines how many GCPs will be calculated.

Registration Window is centered on one GCP cell. The slave and master registration windows are used directly to find the best coherence match, without a reference patch moving step by step. It returns the final range and azimuth offsets (negative or positive) in one pass. All in all, it's done by some math tricks.

Finally, there is an Oversampling Factor used to get sub-pixel accuracy for the offset or velocity, also achieved by some math tricks, of course.

If you want to look inside the math-tricks box, see the thread “Question about Normalized Cross Correlation”.

Thank you. This remark is valuable to me, as I’m not good at math (FFT) or Java. And OK, the offset can be calculated at once without moving step by step. But in any case we must define what to search for (a patch) and where to search (a search area in the slave image). One of my questions was: what are the patch and the search area in SNAP terms? My assumption: patch = a subset around a GCP with the Grid Azimuth/Range Spacing set by the user, search area = registration window.

I guess there are many ways to calculate the offset between the master and slave registration windows. One is to slide a patch to find the best coherence match, like the following:

However, the algorithm used in SNAP directly returns the offset between the slave and master registration windows, so there is no need to know what to search for or where to search.

How it's done is by a math trick. I hope somebody can clarify it for us, but I guess it would take a thousand words. It's too complicated.

What do you call a “math trick”?

In the code you mentioned, the “trick” you refer to is simply oversampling in the frequency domain.

EDIT: The oversampling is implemented in SARUtils.java
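For readers who want to see what “oversampling in the frequency domain” can look like, here is a minimal NumPy sketch. It is a generic illustration, not a port of SARUtils.java: the function name, the zero-padding scheme, and the synthetic test data are all assumptions of mine. The idea is to cross-correlate two windows via the FFT, zero-pad the cross-spectrum, and read the peak of the finer correlation surface.

```python
import numpy as np

def oversampled_shift(master, slave, factor=8):
    """Estimate the (row, col) shift of `slave` relative to `master`.

    Hypothetical helper: cross-correlate via FFT, then zero-pad the
    cross-spectrum so the inverse FFT samples the correlation surface
    about `factor` times more finely. Assumes even-sized windows.
    """
    n_rows, n_cols = master.shape
    cross = np.fft.fftshift(np.fft.fft2(slave) * np.conj(np.fft.fft2(master)))
    pad_r = (n_rows * factor - n_rows) // 2
    pad_c = (n_cols * factor - n_cols) // 2
    padded = np.pad(cross, ((pad_r, pad_r), (pad_c, pad_c)))
    # Taking the real part is exact for band-limited input, approximate otherwise.
    surface = np.fft.ifft2(np.fft.ifftshift(padded)).real
    big_r, big_c = surface.shape
    peak_r, peak_c = np.unravel_index(np.argmax(surface), surface.shape)
    # Peaks in the upper half of the circular surface are negative shifts.
    if peak_r > big_r // 2:
        peak_r -= big_r
    if peak_c > big_c // 2:
        peak_c -= big_c
    return peak_r * n_rows / big_r, peak_c * n_cols / big_c

# Synthetic check: a smooth (band-limited) window shifted by a known sub-pixel amount.
rng = np.random.default_rng(1)
n = 32
freq = np.fft.fftfreq(n)
fr, fc = np.meshgrid(freq, freq, indexing="ij")
lowpass = (np.abs(fr) < 0.25) & (np.abs(fc) < 0.25)
master = np.fft.ifft2(np.fft.fft2(rng.random((n, n))) * lowpass).real
true_shift = (1.25, -0.5)
ramp = np.exp(-2j * np.pi * (fr * true_shift[0] + fc * true_shift[1]))
slave = np.fft.ifft2(np.fft.fft2(master) * ramp).real
est = oversampled_shift(master, slave, factor=8)
print(est)
```

With an oversampling factor of 8 the surface is sampled every 1/8 pixel, so the resolution of the estimate in this sketch is 1/8 pixel, not unlimited.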

Just an informal way of speaking. “Math trick” in this context means “math magic”, exactly like the contents of SARUtils.java.

I don't have the math background to understand the principle behind it, so I call it a “math trick”, or maybe it should be called “math magic”.

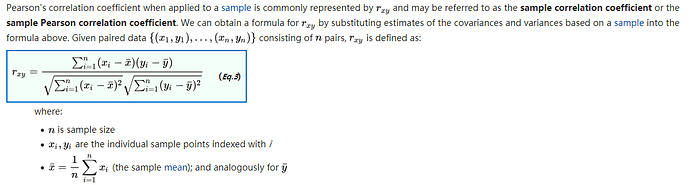

That does not sound convincing. At the very least we must define where to search (the window size). This influences the computation time. And the parameter n in the formula below defines the size of a patch.

I guess what he meant was that, because the images are coregistered, your “first guess” is the same location in both images. Then you search around it.

-> For each pixel in the slave image around the location of a pixel in the master image, you compute the cross-correlation in a small patch. The offset returned is the one that maximizes the local cross-correlation. That’s just an adaptation of an image matching algorithm.
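The spatial-domain matching described in this post can be sketched as follows. This is a plain illustration of patch matching by normalized cross-correlation, not SNAP's implementation; `match_patch`, `half_patch`, and `half_search` are hypothetical names, and the test image is synthetic.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation coefficient of two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def match_patch(master, slave, row, col, half_patch, half_search):
    """Slide the master patch centred on (row, col) over a slave search area.

    Returns (d_row, d_col, peak_ncc) for the integer shift with the highest
    NCC. The strict '>' keeps the first maximum if two scores are equal.
    """
    ref = master[row - half_patch:row + half_patch + 1,
                 col - half_patch:col + half_patch + 1]
    best = (0, 0, -1.0)
    for dr in range(-half_search, half_search + 1):
        for dc in range(-half_search, half_search + 1):
            r, c = row + dr, col + dc
            cand = slave[r - half_patch:r + half_patch + 1,
                         c - half_patch:c + half_patch + 1]
            score = ncc(ref, cand)
            if score > best[2]:
                best = (dr, dc, score)
    return best

# Synthetic check: the slave is the master moved 2 rows down and 3 columns left.
rng = np.random.default_rng(0)
master = rng.random((64, 64))
slave = np.roll(master, shift=(2, -3), axis=(0, 1))
d_row, d_col, peak = match_patch(master, slave, 32, 32, half_patch=5, half_search=6)
print(d_row, d_col, round(peak, 3))
```

In this sketch the patch size and the search radius are two separate parameters, which is exactly the distinction being asked about in this thread.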

Do we set up the size of a small patch in SNAP? And how?

No, we don't set up a small patch, and there is no such template patch defined in the algorithm.

master registration window

slave registration window

The algorithm directly calculates the offset between the slave and master registration windows, not the offset between two patches. Does that make sense?

Finally, as for how it's calculated, see the source code.

This is a matter of terminology. Some use the term “matching window” or “registration window” others call it an “image patch” or “image chip”. I think the algorithms that do the matching in the spatial domain use “patch” or “chip”. Algorithms that do the offset calculation in the frequency domain, which SNAP uses, call it a “window”.

Is there a way to change the size of the matching patch or registration window in SNAP?

Well, as I noticed, at the end an inverse Fourier transform is applied to the input registration window, so I guess the final result is calculated in the spatial domain.
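On the spatial versus frequency domain point: by the correlation theorem, the inverse FFT of the cross-spectrum is the same (circular) cross-correlation surface you would get by shifting one window over the other in the spatial domain. A minimal NumPy sketch of that equivalence follows; the function names and the 8×8 random windows are assumptions for illustration only, not SNAP code.

```python
import numpy as np

def circular_xcorr_direct(master, slave):
    """Circular cross-correlation computed by explicit shifting (slow)."""
    rows, cols = master.shape
    out = np.empty((rows, cols))
    for dr in range(rows):
        for dc in range(cols):
            shifted = np.roll(master, shift=(dr, dc), axis=(0, 1))
            out[dr, dc] = np.sum(slave * shifted)
    return out

def circular_xcorr_fft(master, slave):
    """The same surface from one forward FFT pair and one inverse FFT."""
    cross = np.fft.fft2(slave) * np.conj(np.fft.fft2(master))
    return np.fft.ifft2(cross).real

rng = np.random.default_rng(2)
master = rng.random((8, 8))
slave = np.roll(master, shift=(1, 3), axis=(0, 1))  # known circular shift

direct = circular_xcorr_direct(master, slave)
via_fft = circular_xcorr_fft(master, slave)
same = np.allclose(direct, via_fft)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(via_fft), via_fft.shape))
print(same, peak)
```

So the two views need not conflict: the surface lives in the spatial (lag) domain, and the FFT is one way to compute every lag at once instead of stepping through them.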

Yes, the terminology for the input slave and master windows is not unified in the field. We hear many terms, like “image patch”, “image chip”, “patch”, “chip”. Maybe the algorithm's author didn't intend to distinguish between them. But that's not important; it's the input windows that matter.

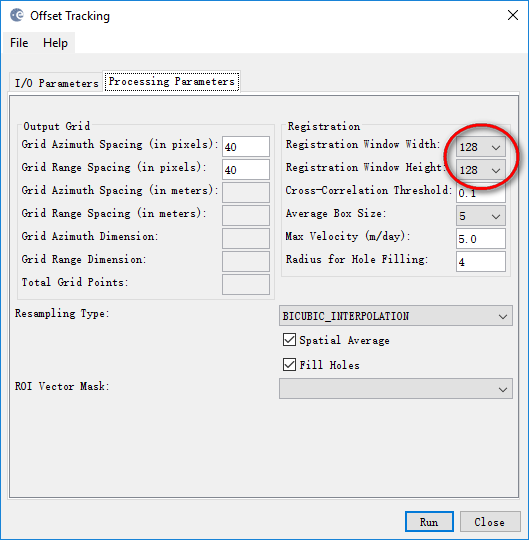

The input window size is determined by the settings in the red box. You can set the size by changing those parameters.

In the spatial domain there are a ‘patch’ and a ‘search area’. Why not use similar concepts in the frequency domain? SNAP requires the size of the ‘registration window’ to be greater than the maximum displacement. Such a requirement applies to a search area, not to a patch.

Can anyone mention a publication where the complete procedure of offset tracking implemented in SNAP is presented mathematically/arithmetically? The very brief explanation in the product manual is not clarifying enough. In particular it is not clear how the algorithm calculates velocities along Azimuth / Range …

Thanks in advance

Sina

And a slightly different question about the algorithm. A candidate point in the slave image matches a given reference GCP in the master image if the cross-correlation criterion reaches its maximum at that candidate point. What if the cross-correlation criterion reaches the same maximum value at two different candidate points? What rule will SNAP use to choose the true matching point?

SNAP specifies no rule for the case of equal correlation values, meaning that the last one is the one retained.
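To illustrate how “no explicit rule” can end up meaning “the last one wins”, here is a hypothetical sketch. It is not SNAP's code; it only shows that the choice of comparison operator in a peak search decides which of two equal maxima is kept.

```python
def last_max_index(values):
    """Peak search using '>=': on a tie, the later candidate replaces the earlier one."""
    best_idx, best_val = -1, float("-inf")
    for i, v in enumerate(values):
        if v >= best_val:
            best_idx, best_val = i, v
    return best_idx

scores = [0.2, 0.7, 0.4, 0.7, 0.1]   # made-up correlation values with a tie
last = last_max_index(scores)         # index of the last 0.7
first = scores.index(max(scores))     # index of the first 0.7
print(last, first)
```

With a strict `>` instead of `>=`, the same loop would keep the first of the tied candidates.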

However, this case is very unlikely to ever happen, especially with 16 bits per pixel.

Thank you. SNAP probably sometimes chooses an incorrect matching point because of the many random factors involved. Is there a way to assess the probability that we have found the true matching point (and consequently the displacement)? In other words, why and how much can I rely on SNAP offset tracking results?