Hi,

Is it possible to build a spectral library based on Sentinel-2 data in SNAP and can the spectral library be used for classification also in SNAP, for example using Maximum-Likelihood Classifier?

Thanks for any tips

There is no real spectral library in SNAP.

But if you want to classify something you can have a look at this thread:

Maybe this already gets you a bit further.

Another option is spectral unmixing. Its result can then be used to classify the image.

The endmembers needed for the spectral unmixing can be defined by setting pins and exporting them by using the Spectrum View. You should give names to the pins like water or forest before exporting them.

Thanks for the reply.

The classifications in the thread “Supervised and unsupervised classification, Sentinel 2” are based on geometric training areas, not on spectral reflectance curves.

I’ve generated spectral reflectance curves using pins and the Spectrum View and obtained abundances from the Spectral Unmixing tool. How can I use them for classification?

Maybe my idea wasn’t a good one. What you could do is define masks on the abundances.

For example: ocean_abundance > 0.8 && forest_abundance < 0.1. These masks could form your classes. But I think this is not really reliable.
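
The mask idea can be sketched outside SNAP roughly as follows. This is only a minimal illustration, assuming the abundance bands are available as NumPy arrays; the array names, the thresholds, the class codes and the pixel values are all made up for the example and are not SNAP band names or real results.

```python
import numpy as np

# Hypothetical abundance bands (one value per pixel), e.g. exported from
# the Spectral Unmixing result. The values are invented for this sketch.
ocean_abundance = np.array([[0.95, 0.40], [0.85, 0.05]])
forest_abundance = np.array([[0.02, 0.50], [0.30, 0.90]])

# Class codes chosen for this sketch only.
UNCLASSIFIED, OCEAN, FOREST = 0, 1, 2

# Each mask is a boolean expression on the abundances, like a mask expression.
ocean_mask = (ocean_abundance > 0.8) & (forest_abundance < 0.1)
forest_mask = (forest_abundance > 0.8) & (ocean_abundance < 0.1)

# np.select uses the first condition that matches; pixels matching no
# condition keep the default value (unclassified).
classes = np.select([ocean_mask, forest_mask], [OCEAN, FOREST], default=UNCLASSIFIED)
print(classes)
```

Pixels that satisfy none of the rules stay unclassified, which is one reason why fixed thresholds tend to be less robust than a trained classifier.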

In the other thread they use areas. But I assume the spectra of the pixels are “averaged”. So the classes are also based on spectra. You could create very tiny vectors covering just one pixel.

But I can’t help further. I’m just a developer…

There are some spectral libraries being built, for example this one here: http://micromet.reading.ac.uk/spectral-library/. It would be of great interest and importance for future studies on urban development, health protection and energy consumption to have a way to map/classify structures/objects based on spectral libraries. In my case, I would just love to have this tool right now, for a study I’m doing on a specific material that has been used in construction.

You can import these signatures (after a bit of preparation) into the spectral unmixing tool of SNAP. It allows the fuzzy classification of materials in an image. Have a look at the help menu. It gives an example of how the data should look.

Hi ABraun! Thank you for the reply! I’m already trying to apply your advice, but I’m having some trouble producing the CSV file in the format the Spectral Unmixing tool expects; it doesn’t recognize my file (I/O: error format). Could you please help me with a small example of how to do it, at least for one class?

Some questions I have about building the file:

Can I save the file with UTF-8 encoding?

Should I use " , " or " . " as the decimal separator?

If I want to use bands B1-B8a and B11-B12, should the spectrum file contain only eleven wavelengths, one per band, in this case: «443.0 490.0 560.0 665.0 705.0 740.0 783.0 842.0 865.0 1610.0 2190.0», or can I instead keep the library’s own wavelength sampling: «348.1 349.7 351.3 352.9 354.5 356.1 357.6 359.2 360.8 362.4 363.9 365.5 367.1 368.7 370.2 371.8 373.4 374.9 376.5 378 …», with one reflectance value for each?

About the format/arrangement of the data:

Can I copy the complete wavelength row from an Excel table into a txt file, do the same for the reflectance, and then save it in CSV format, row-wise? For example, could the result look like this?

«wavelength 443.0 490.0 560.0 665.0 705.0 740.0 783.0 842.0 865.0 1610.0 2190.0

Material 3.894208979 4.947676348 5.098305633 5.1914169793916 5.35609296643912 5.086537707 4.883727736 4.035727057 4.052539254 4.053264186 4.059407778»

Or should I copy entire columns from the Excel file and arrange them column-wise in the txt file instead, with wavelength and material reflectance side by side?

I hope it’s not too much trouble to help me with this. I would appreciate it a lot. In any case, thank you so much for the guidance you have given.

I have posted a sample file which matches all criteria here: “Weird result from the Spectral Unmixing of a Sentinel2”

Tab delimited is best according to my experience, UTF-8 is fine.
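
For readers without access to the sample file, here is a minimal sketch of how such a tab-delimited, UTF-8 file could be written with Python, using the wavelengths and reflectance values quoted in the question above. Several things are assumptions of this sketch and not confirmed in this thread: the column-wise layout (one row per wavelength, one column per material), the header name `wavelength`, the "." decimal separator, and the file name `endmembers.csv`. Please check the result against the sample file and the example in the SNAP help.

```python
import csv
import io

# Band-centre wavelengths (nm) and reflectance values quoted in the question.
wavelengths = [443.0, 490.0, 560.0, 665.0, 705.0, 740.0,
               783.0, 842.0, 865.0, 1610.0, 2190.0]
material = [3.894208979, 4.947676348, 5.098305633, 5.1914169793916,
            5.35609296643912, 5.086537707, 4.883727736, 4.035727057,
            4.052539254, 4.053264186, 4.059407778]


def endmember_table(wavelengths, endmembers):
    """Return tab-delimited text: one row per wavelength, one column per endmember.

    The column-wise layout and the "wavelength" header are assumptions.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter="\t", lineterminator="\n")
    writer.writerow(["wavelength", *endmembers])
    for i, wavelength in enumerate(wavelengths):
        # Python writes floats with "." as decimal separator, whatever the locale.
        writer.writerow([wavelength, *(values[i] for values in endmembers.values())])
    return buffer.getvalue()


text = endmember_table(wavelengths, {"Material": material})

# Save as UTF-8; the file name is hypothetical.
with open("endmembers.csv", "w", encoding="utf-8", newline="") as f:
    f.write(text)
print(text)
```

Further materials would be added as extra entries in the dictionary, each with one value per wavelength.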

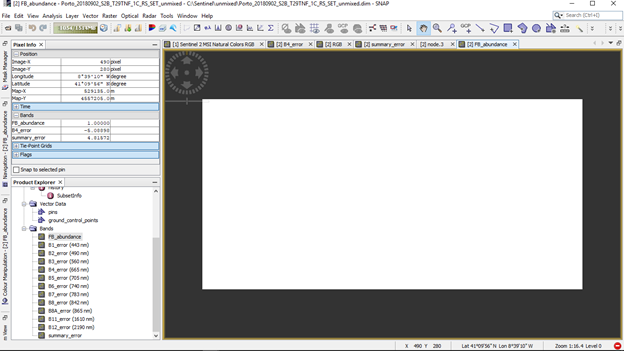

Thank you a lot ABraun, it worked! Now I have to read a little to understand the results. But I’m curious why the material abundance is a completely blank image, with every pixel having the value «1». Maybe I have to set the minimum bandwidth to a value other than 10 nm, or change the spectral unmixing model. I will post the input values and output here to share this experience; maybe something isn’t right.

Input:

output:

All pixels are equally probable for your one material.

Usually, you use several endmembers (materials) to train and the unmixing decides how much of each signature is represented in each pixel.
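
To illustrate the point, here is a minimal sketch of linear unmixing with NumPy. It is not SNAP’s implementation: the spectra are invented, the function `unmix` is hypothetical, and the sum-to-one constraint is only approximated by appending a heavily weighted row of ones to the least-squares system (a common trick, assumed here for simplicity). It shows that two endmembers recover the mixing fractions, while a single endmember under a sum-to-one constraint gets an abundance of about 1 whatever the pixel looks like.

```python
import numpy as np

# Made-up endmember spectra (reflectance 0-1) at four bands.
water = np.array([0.06, 0.05, 0.03, 0.01])
forest = np.array([0.03, 0.05, 0.04, 0.40])


def unmix(E, pixel, sum_to_one=False, weight=1e3):
    """Least-squares abundances for one pixel; columns of E are endmembers."""
    if sum_to_one:
        # Approximate the constraint sum(a) = 1 with a strongly weighted extra equation.
        E = np.vstack([E, weight * np.ones(E.shape[1])])
        pixel = np.append(pixel, weight)
    abundances, *_ = np.linalg.lstsq(E, pixel, rcond=None)
    return abundances


# Two endmembers, synthetic pixel made of 30 % water and 70 % forest.
E = np.column_stack([water, forest])
pixel = 0.3 * water + 0.7 * forest
mixed = unmix(E, pixel)
print(mixed)

# One endmember only, sum-to-one enforced: a pure forest pixel is still
# assigned a water abundance of about 1.
single = unmix(water.reshape(-1, 1), forest, sum_to_one=True)
print(single)
```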

It is also important that the signature and the rasters have the same unit. Is your signature given in radiance? It would be better to have both the signature and the rasters in calibrated reflectance (0-1).

Why are your bands called “B1_error”? They seem to be the result of an unmixing already.

I think it worked (but I will explore other hypotheses). I changed the model to unconstrained and it worked. Here are screenshots of the input and output:

input:

output:

Yes, it is in radiance, got it from this file: LUMA_SLUM_SW.csv

I will also try your advice about calibrated reflectance to see what happens (thank you!).

About the bands (B1_error): they were the result of a previous unmixing product (sorry, my mistake). The input example is now correct, have a look.

Thank you so much for being so helpful.

good job - congratulations!

If the input data produces this result, there might be no need for calibration. The output values (abundances) just have to be interpreted with caution. Especially if you are using the unconstrained method, they no longer represent fractional values (0-100%).

Ok. Thank you! I have to read much more about what is going on here (with the spectral unmixing tool) to see if I can understand the results and test further specifications, hypotheses, material reflectances, etc., so that I can find a way to estimate, for a given location, how much of a given material is present in buildings, for example.

I will come back with a final result for what I’m looking for, if I achieve one. Thank you a lot!!

ABraun, one further thought (and I think it was one of the main questions at the start of this thread): if I want to classify a given place with a classifier algorithm, can I add these abundance results to the source bands as input? Or maybe use only the abundance bands as input to the classifier… hmm… I need to dig deeper into this…

Yes, but only with the Random Forest classifier. It can use input bands of different units and measures. But it also needs a larger amount of input data, because it is based on randomization of both training samples and input features.

More explanations on this: Number of training samples at Random forest classifier

ABraun, I couldn’t get much further in the study I’m trying to do because of the limited number of bands in Sentinel-2 MSI (B1-B12). The spectral unmixing algorithm doesn’t produce much of a result with MSI data, only with hyperspectral images, as far as I can understand from this paper: 10.1109/JSTARS.2012.2194696 and from the tests I have done. So I’m trying to find data from hyperspectral satellites such as Hyperion (I don’t know if SNAP can read/import Hyperion data) or others (non-commercial). If you have any advice, it would be of great significance to me.

I was able to derive results from Sentinel-2 data as well. Of course, hyperspectral data is nice to have, but it is not necessarily a prerequisite.